K8s with external Ceph, disaster recovery, and StorageClass migration

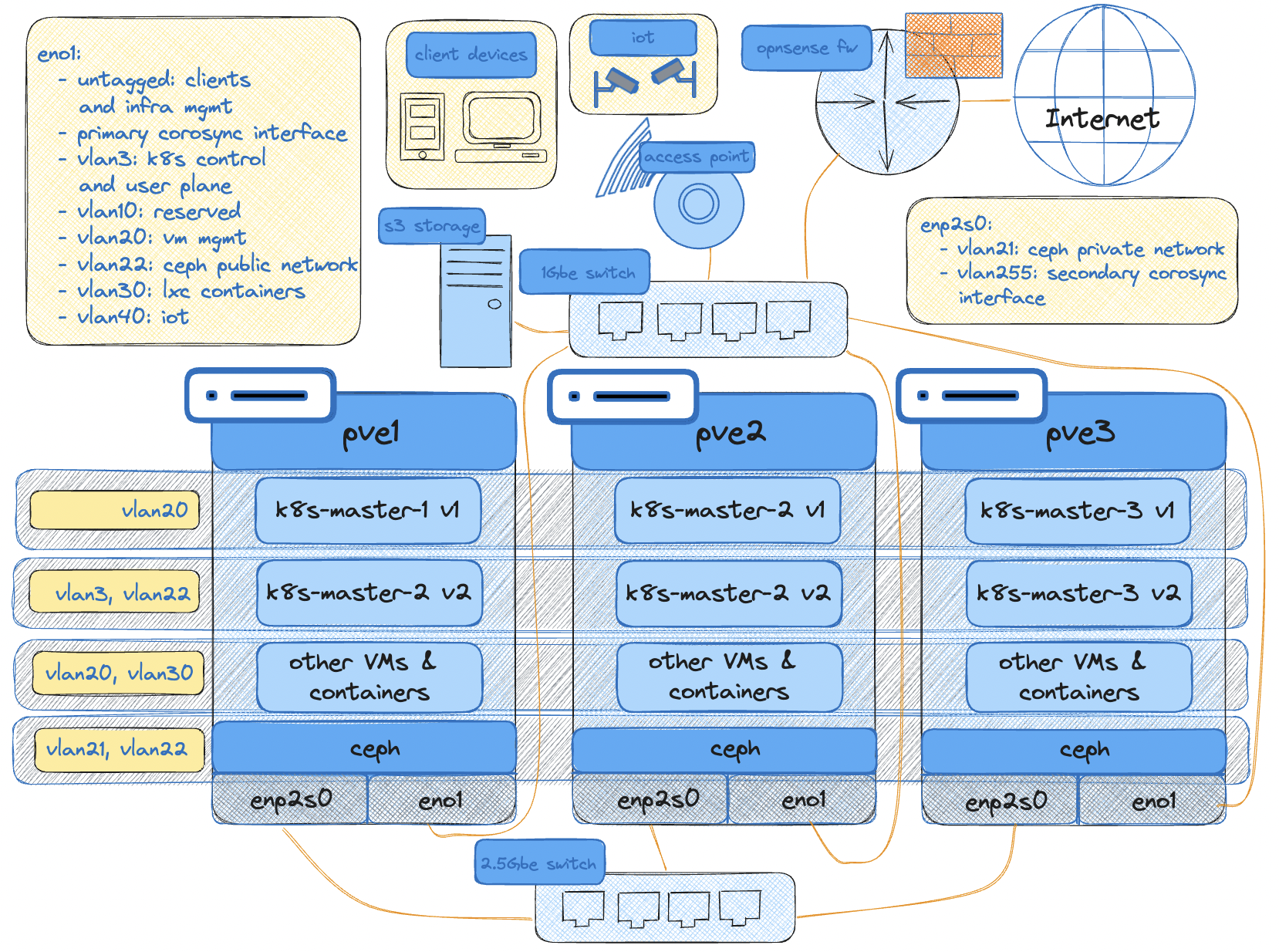

In the past couple of weeks I was able to source matching mini USFF PCs, upgrading the mini homelab from 14 CPU cores to 18! Along with this, I decided to add a 2.5GbE NIC and a 1TB NVMe drive to each device for Ceph, allowing for hyper-converged infrastructure. Ceph on its own is a huge topic. It has so many moving parts: monitors, metadata servers, OSDs, and placement groups, to name a few...