The past months have been crazy since I started my homelab. There has been so much reading here and there, and a bulk of what used to be my idle time now goes to technical research and self-development. My writing hasn’t been able to keep up either, since I’ve been making changes and modifications from the get-go.

The network just reached the four-month mark, and it’s already about to undergo a somewhat major redesign. But before moving on to the next chapter of the journey, I want to take this time to log the current state of the existing infrastructure and services. Don’t get me wrong: I don’t run a full-fledged data center, nor do I even have a server rack. My only critical files are photos and videos, and there are only two users at home, including myself (lol). It’s called a homelab for a reason. Still, it’s fun to document the progress, big or small, and see how far I’ve come along the way.

Homelab Networking and Hardware

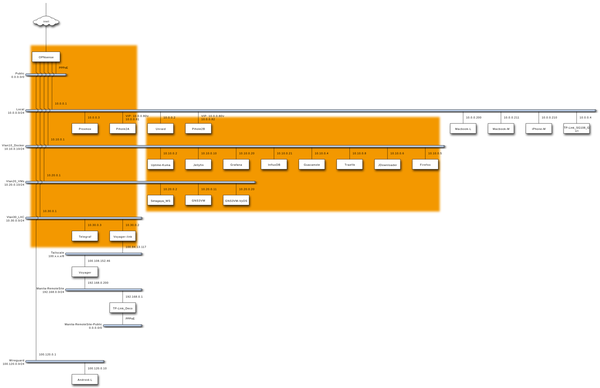

At a high level, below is the current diagram of existing services, subnets, VLANs, and devices.

| VLAN | Traffic type | Services |
|---|---|---|
| Untagged | Local | Local devices, DNS |
| 10 | Docker | Traefik, UptimeKuma, InfluxDB, Grafana, Code-server, Firefox, Guacamole, JDownloader, Jellyfin, Krusader, Wiki.js |
| 20 | VM | Setagaya Workstation (PopOS), GNS3 server (Ubuntu 22.04), Voyager-staging (staging environment for remote server), Windows 10 |
| 30 | LXC | Tailscale, Deluge |

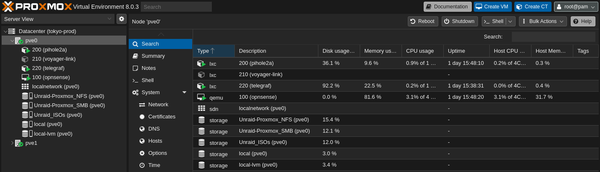

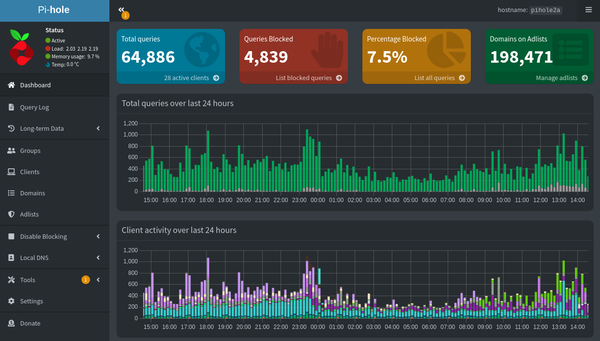

Orange boxes signify the servers running a hypervisor: one runs Proxmox, the other Unraid. The Proxmox box mainly runs my network stack, which includes OPNsense, an LXC container running Pi-hole as my DNS, and another one running Tailscale for a machine-to-machine VPN with the remote peer acting as an exit node. While it might be possible to run Tailscale from within OPNsense, I decided to configure it separately since my use case is simple: tunneling torrent traffic to a small thin client back home in Manila.

On the other hand, the Unraid box primarily runs our NAS along with a couple of Docker containers and VMs. It’s been very stable, running without a hiccup except back when all containers and VMs, along with every other device, sat on a single subnet. That is what pushed me to segregate the traffic with VLANs. Speaking of which, this was made easy by a cheap “smart” (effectively managed) switch from TP-Link. I can’t stress enough how much I love this brand! I’ve been using their routers for more than a decade now, and they have never failed me.
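For anyone new to VLANs: on trunk links, a VLAN-aware switch identifies each frame’s VLAN with an IEEE 802.1Q tag, a 4-byte field carrying a 12-bit VLAN ID, which is what the numbers in the VLAN column of the table above refer to. The Python sketch below packs and parses that tag to show its layout. It only illustrates the standard; it says nothing about how the TP-Link switch is actually configured, and the function names are my own.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks a frame as 802.1Q-tagged


def pack_vlan_tag(vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Build the 4-byte 802.1Q tag: a 16-bit TPID followed by a 16-bit TCI."""
    if not 0 <= vid <= 0xFFF:
        raise ValueError("VLAN ID must fit in 12 bits")
    if not 0 <= pcp <= 7 or dei not in (0, 1):
        raise ValueError("PCP is 3 bits and DEI is 1 bit")
    # TCI layout, most significant bit first: PCP (3) | DEI (1) | VID (12)
    tci = (pcp << 13) | (dei << 12) | vid
    return struct.pack("!HH", TPID_8021Q, tci)  # network byte order


def unpack_vlan_tag(tag: bytes) -> dict:
    """Parse a 4-byte 802.1Q tag back into its PCP, DEI, and VID fields."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError(f"not an 802.1Q tag (TPID={tpid:#06x})")
    return {"pcp": tci >> 13, "dei": (tci >> 12) & 1, "vid": tci & 0xFFF}


if __name__ == "__main__":
    # VLAN IDs from the table: Docker (10), VM (20), and LXC (30) traffic
    for vid in (10, 20, 30):
        tag = pack_vlan_tag(vid)
        print(vid, tag.hex(), unpack_vlan_tag(tag))
```

Untagged traffic (the first row of the table) simply has no such tag, and the switch assigns it to the port’s native VLAN.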

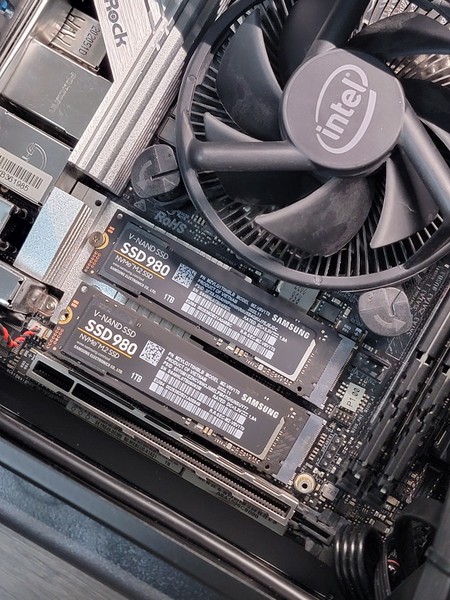

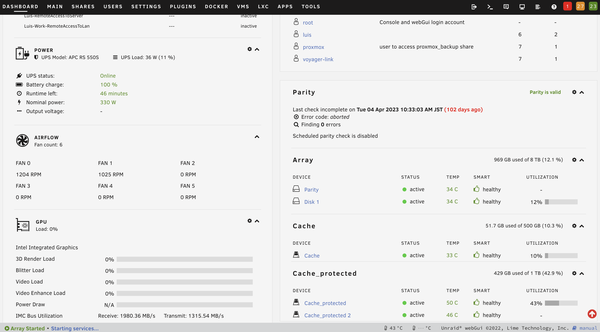

Back to the Unraid server: it has also undergone a few upgrades (the initial specs can be found in one of my first few posts here). First, I added a 500GB SSD as an unmirrored cache drive to reduce wear and tear on the NVMe drives. It mainly holds non-critical data such as movies, TV shows, and ISO files.

Second, I installed a third-party heat sink for the NVMe drives, which brought their temperatures down by about 5-10°C.

Third, I added a 330W UPS from APC, which allows a graceful shutdown during power outages and protects against power surges.

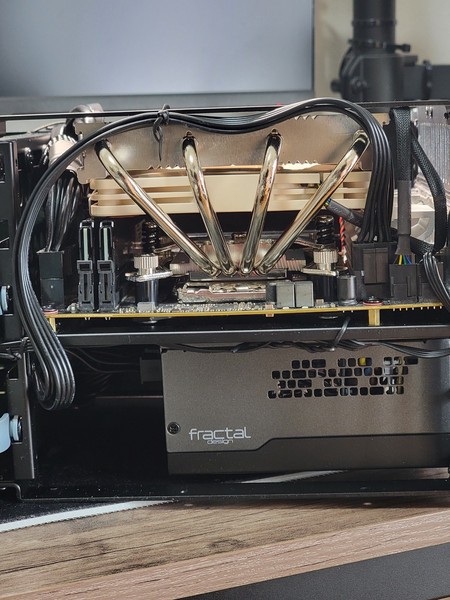

Fourth, I installed a Noctua NH-L12S cooler to bring down CPU temperatures.

Lastly, I upgraded the RAM to 64GB after running out of memory while working with GNS3: 32GB wasn’t enough when running multiple containers and spawning multiple VMs on top of GNS3.

Services

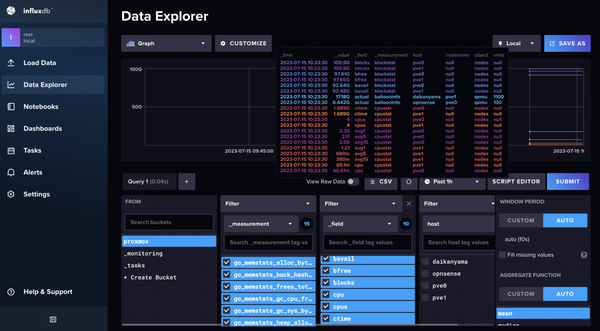

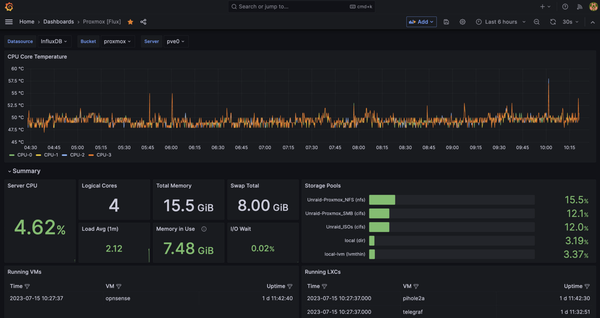

As for services, I’m running just a handful. For the monitoring stack, I have Telegraf, InfluxDB, and Grafana to monitor resource utilization on the Proxmox box. I also have UptimeKuma, which provides a neat and simple dashboard for monitoring service uptime.
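To give a feel for what travels between Telegraf and InfluxDB: points are written in InfluxDB’s line protocol, one text line per point in the shape `measurement,tag=value field=value timestamp`. Below is a minimal Python formatter as a sketch. It handles only float fields and the basic escaping rules, and the `cpu` measurement, `host=proxmox` tag, and field names in the demo are illustrative assumptions rather than a dump of what my Telegraf instance actually sends.

```python
def _escape(text: str, special: str) -> str:
    """Backslash-escape each character in `special`."""
    for ch in special:
        text = text.replace(ch, "\\" + ch)
    return text


def to_line_protocol(measurement, tags, fields, timestamp_ns=None):
    """Format one point as InfluxDB line protocol (float fields only)."""
    if not fields:
        raise ValueError("a point needs at least one field")
    # Measurement names escape commas and spaces
    line = _escape(measurement, ", ")
    # Tag keys and values escape commas, spaces, and equals signs;
    # tags are sorted by key, which InfluxDB recommends for write performance
    for key in sorted(tags):
        line += f",{_escape(key, ', =')}={_escape(str(tags[key]), ', =')}"
    # Fields are separated from tags by a space; repr() keeps full float precision
    line += " " + ",".join(
        f"{_escape(key, ', =')}={float(value)!r}" for key, value in fields.items()
    )
    if timestamp_ns is not None:
        line += f" {int(timestamp_ns)}"  # nanosecond precision by default
    return line


if __name__ == "__main__":
    print(to_line_protocol(
        "cpu",
        {"host": "proxmox", "cpu": "cpu-total"},
        {"usage_idle": 97.5, "usage_system": 1.2},
        1690000000000000000,
    ))
```

Grafana then queries InfluxDB for these series; it never sees the raw lines.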

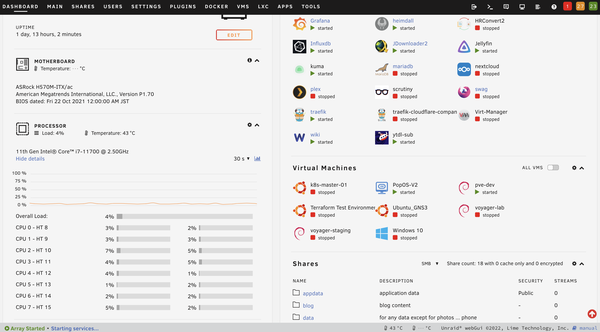

For resource monitoring of my Unraid box, I just use the built-in dashboard, which already gives me all the information I need: CPU and disk temperatures; CPU, disk, and memory utilization; and power consumption.

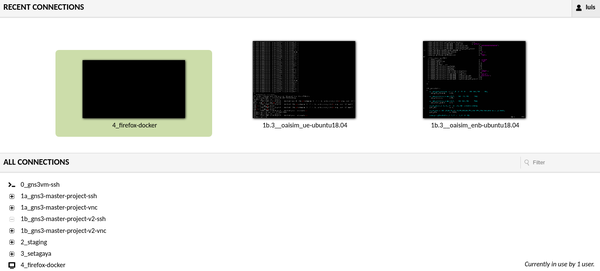

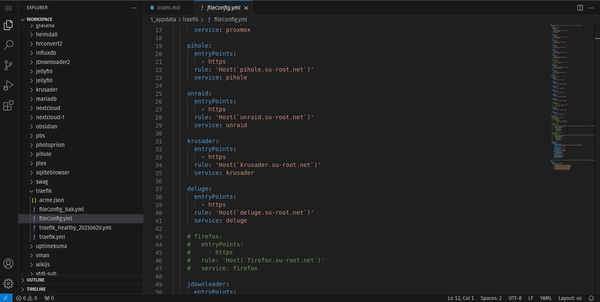

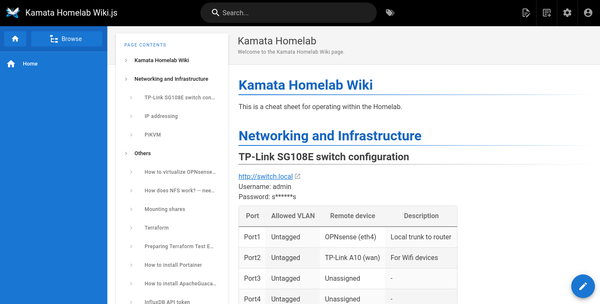

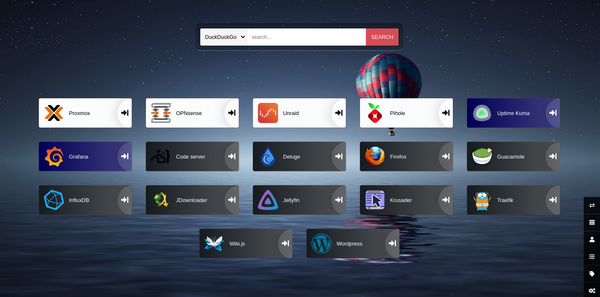

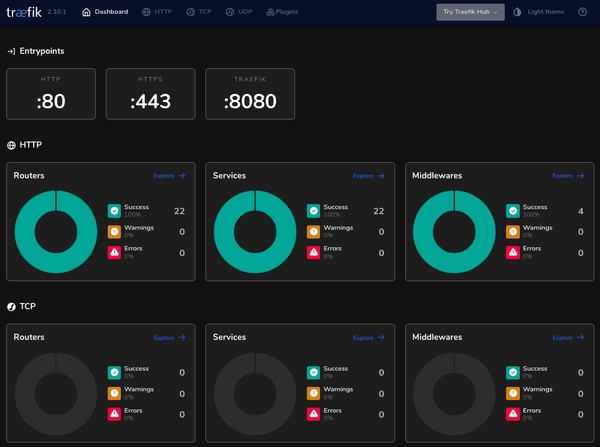

For productivity and utilities, I have Guacamole for remote access to VMs and the command line, Krusader for file management within Unraid, Visual Studio Code (code-server) for editing configuration files and blogging, Firefox for browser access without spinning up a VM, Wiki.js for storing important information and notes on anything related to the homelab and the projects I am working on, JDownloader for HTTP downloads (mainly ISO files), Heimdall as my homelab dashboard, and Traefik as my reverse proxy!
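At its core, a reverse proxy like Traefik looks at each incoming request and forwards it to the right container, most commonly by matching the `Host` header against a router rule such as ``Host(`wiki.home.example`)``. The Python sketch below mimics only that matching step with a plain dictionary. The hostnames and IP addresses are hypothetical; the ports are the usual defaults for Jellyfin (8096), Wiki.js (3000), and Uptime Kuma (3001). Real Traefik rules also cover paths, headers, TLS, and middlewares.

```python
from typing import Dict, Optional

# Hypothetical routing table: hostname -> backend container on the Docker VLAN
ROUTES: Dict[str, str] = {
    "jellyfin.home.example": "http://10.0.10.20:8096",
    "wiki.home.example": "http://10.0.10.21:3000",
    "uptime.home.example": "http://10.0.10.22:3001",
}


def pick_backend(host_header: str, routes: Dict[str, str] = ROUTES) -> Optional[str]:
    """Return the backend URL for a request's Host header, or None if nothing matches."""
    # Host headers are case-insensitive and may include a port ("wiki.home.example:443")
    # or a trailing dot; normalise before the lookup. IPv6 literals are not handled here.
    hostname = host_header.strip().split(":", 1)[0].lower().rstrip(".")
    return routes.get(hostname)


if __name__ == "__main__":
    for host in ("Jellyfin.home.example", "wiki.home.example:443", "nas.home.example"):
        print(host, "->", pick_backend(host))
```

A request for an unknown host gets no backend, which is roughly where Traefik would answer with a 404.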

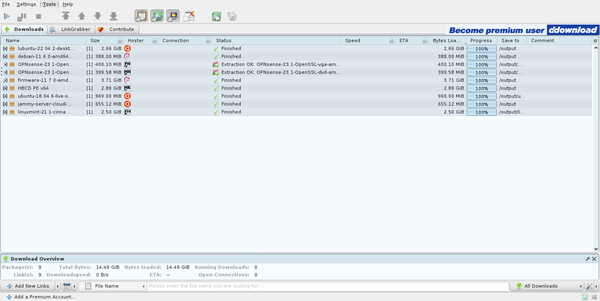

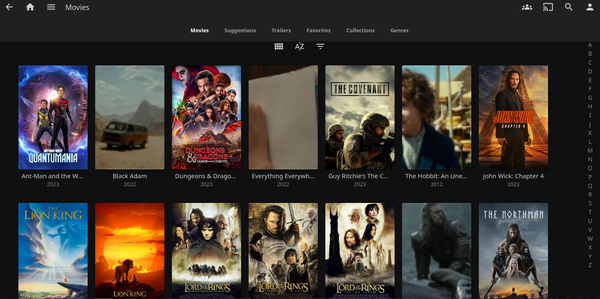

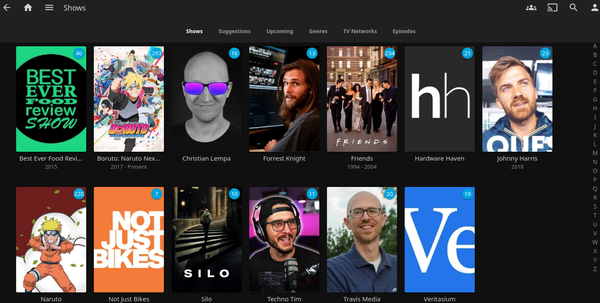

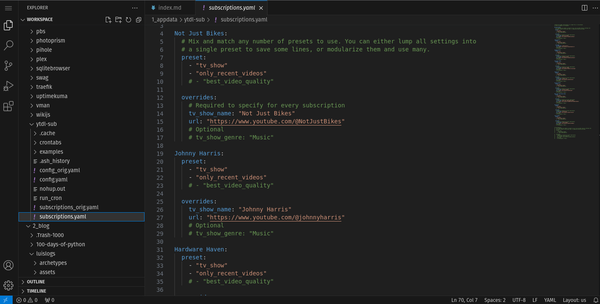

For media services, I have Jellyfin for transcoding and serving media, both at home and on the go. To populate the media library my fiance and I share with videos from our favorite YouTube channels, I use ytdl-sub. It automatically downloads the videos of any defined YouTube channel, enforces the specified retention period, and adds the necessary metadata for a nice, smooth experience when browsing in Jellyfin. And since the videos are played back locally, we also get ad-free content. For legal downloading of media, I have Deluge. Since I don’t use it often, I still transfer files manually to the Jellyfin directory.
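ytdl-sub takes care of the retention period for me, but the rule itself is easy to sketch: keep a channel’s videos uploaded within the last N days and mark the rest for deletion. The Python below is a hypothetical illustration of that rolling window, not ytdl-sub’s code or configuration; the `Video` type, the 14-day window, and the sample titles and dates are made up.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Tuple


@dataclass
class Video:
    title: str
    upload_date: date


def split_by_retention(videos: List[Video], keep_days: int, today: date) -> Tuple[List[Video], List[Video]]:
    """Split videos into (keep, expire) based on a rolling retention window."""
    cutoff = today - timedelta(days=keep_days)
    keep = [v for v in videos if v.upload_date >= cutoff]   # still inside the window
    expire = [v for v in videos if v.upload_date < cutoff]  # candidates for deletion
    return keep, expire


if __name__ == "__main__":
    # Hypothetical sample data for one channel
    videos = [
        Video("episode-3", date(2023, 6, 28)),
        Video("episode-2", date(2023, 6, 20)),
        Video("episode-1", date(2023, 6, 1)),
    ]
    keep, expire = split_by_retention(videos, keep_days=14, today=date(2023, 6, 30))
    print([v.title for v in keep])    # ['episode-3', 'episode-2']
    print([v.title for v in expire])  # ['episode-1']
```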

I’m aware I’m still lacking in the automated media management department, since I haven’t set up the *ARR services yet, but I can’t find the time, especially now that this homelab keeps me branching out into different projects all at once. At the moment, I just have bigger fish to fry.

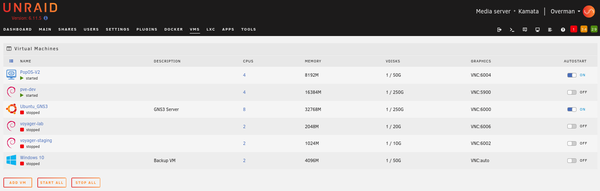

VMs

As for the VMs, I only have a few. My main workstation runs PopOS, which is based on Ubuntu. I do use a MacBook at home, but I still log in to this machine via RDP almost all the time, since it gives me anytime-anywhere remote access and lets me easily pick up where I left off.

Though it’s shut down 99% of the time, I also keep a Windows 10 VM in case I need software that can’t run on Linux. I also keep a staging environment running on Debian for the remote Tailscale exit node installed back home in Manila. Another is an Ubuntu VM running a GNS3 server for side projects on Kubernetes and networking. My VM disks live on the NVMe drives, so the VMs load very quickly and are pleasant to play around with. The NVMe drives are also mirrored in RAID1, so I don’t have to worry about losing data if one of the two drives fails.
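What RAID1 buys can be shown with a toy model: every write lands on both drives, a read still succeeds after one drive fails, and usable capacity equals the smaller member. The Python below is a hypothetical illustration of that idea only, not how Unraid implements its mirrored pool; it ignores rebuilds, checksums, and everything else a real array handles, and sizes are counted in abstract blocks.

```python
class Raid1:
    """Toy two-way mirror; each 'drive' is a dict of block number -> bytes."""

    def __init__(self, blocks_a: int, blocks_b: int):
        # A mirror can only hold as much as its smallest member
        self.capacity = min(blocks_a, blocks_b)
        self.drives = [{}, {}]  # None marks a failed drive

    def write(self, block: int, data: bytes) -> None:
        if not 0 <= block < self.capacity:
            raise IndexError("block is outside the usable capacity")
        if all(drive is None for drive in self.drives):
            raise OSError("array has no working drives")
        # Every write goes to each drive that is still online
        for drive in self.drives:
            if drive is not None:
                drive[block] = data

    def fail(self, index: int) -> None:
        """Simulate a drive dying; its contents become unreachable."""
        self.drives[index] = None

    def read(self, block: int) -> bytes:
        # Any surviving member has a complete copy, so try them in turn
        for drive in self.drives:
            if drive is not None and block in drive:
                return drive[block]
        raise OSError("no surviving copy of this block")


if __name__ == "__main__":
    mirror = Raid1(blocks_a=1000, blocks_b=1000)
    mirror.write(0, b"vm-disk-block")
    mirror.fail(0)         # first NVMe drive "crashes"
    print(mirror.read(0))  # b'vm-disk-block', served by the second drive
```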

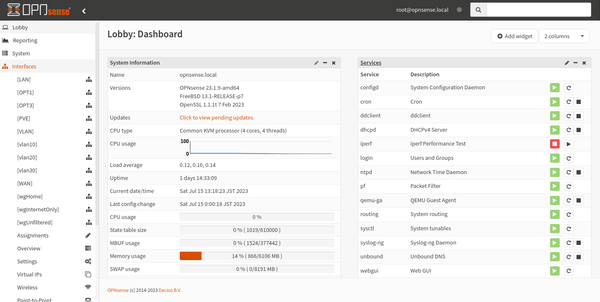

Last but not least is my router! Running as a VM on Proxmox, it just works wonderfully. I did experience random reboots, but those stopped after I upgraded the kernel from 5.4 to the newer 6.1 together with the Intel microcode package. My longest uptime with it was around 40 days before I had to reboot for maintenance work.

Inside OPNsense, I run Unbound, which caches DNS resolutions so that repeat queries from downstream clients are answered faster. The browsing experience became significantly better after enabling this. Websites also started to load faster after I enabled DNS over HTTPS. I tried to find an explanation for this, but I will leave it unanswered for now. I also have my Cloudflare Dynamic DNS agent running here. For VPN, I have WireGuard, which gives me unfiltered access to the entire network. I use it on a daily basis, tunneling at the very least my DNS traffic to Pi-hole for ad blocking.
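The speed-up from Unbound’s cache comes down to a simple idea: once a name has been resolved, repeat queries are answered from memory until the record’s TTL runs out, skipping the round trip to upstream servers. Below is a minimal Python sketch of such a TTL cache, assuming a hypothetical `resolve_upstream` callable that returns an address and a TTL. Unbound’s real cache is considerably more involved, so treat this as an illustration of the principle only. The address `192.0.2.10` comes from a documentation-only range.

```python
import time
from typing import Callable, Dict, Tuple

Resolver = Callable[[str], Tuple[str, int]]  # name -> (address, ttl in seconds)


class TTLCache:
    """Serve repeat DNS lookups from memory until each answer's TTL expires."""

    def __init__(self, resolve_upstream: Resolver, clock: Callable[[], float] = time.monotonic):
        self._resolve = resolve_upstream
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[str, Tuple[str, float]] = {}  # name -> (address, expires_at)
        self.upstream_queries = 0

    def lookup(self, name: str) -> str:
        name = name.lower().rstrip(".")  # DNS names are case-insensitive
        now = self._clock()
        cached = self._entries.get(name)
        if cached is not None and now < cached[1]:
            return cached[0]  # cache hit: no upstream round trip
        address, ttl = self._resolve(name)  # miss or expired: ask upstream
        self.upstream_queries += 1
        self._entries[name] = (address, now + ttl)
        return address


if __name__ == "__main__":
    fake_now = [0.0]
    cache = TTLCache(lambda name: ("192.0.2.10", 300), clock=lambda: fake_now[0])
    cache.lookup("example.com")
    cache.lookup("EXAMPLE.com.")   # same name, served from the cache
    fake_now[0] = 301.0            # jump past the 300-second TTL
    cache.lookup("example.com")    # expired, so it goes upstream again
    print(cache.upstream_queries)  # 2
```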

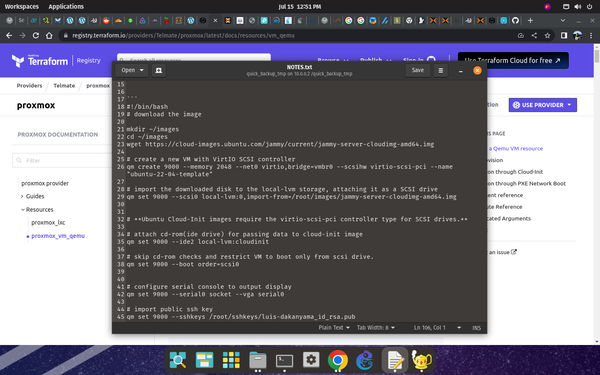

I think that’s all for the network’s status quo. Over the next few weeks, I will have to wrap my head around Terraform, Ansible, and Kubernetes, because I’ll be migrating most, if not all, of these services to a “production” k8s cluster with the help of automation. I’ve been sitting on the fence about this for quite some time now, but I finally decided to go with it to get hands-on experience with Infrastructure-as-Code. Is it necessary? Not really, but it sure is interesting.