Last June I shared a post about deploying a 4G core network, exploring containerization of 4G telco applications at home with GNS3. At that time GNS3 added another layer of virtualization, since it ran as a VM on top of my NAS. This time I’ve converted my main server from a NAS-first machine into a hypervisor-first setup, which lets me spin up VMs faster and more efficiently with the help of Terraform and Ansible.

Today I also want to share how I started exploring Cilium’s other features alongside the open5gs 5G core. Luckily, the official documentation links to Helm charts that Gradiant created and published on GitHub. Their tutorial includes working values.yaml files that integrate the open5gs applications with the UERANSIM simulator, which Gradiant also provides as a separate Helm chart.

In this demonstration we will gradually build up our network policies based on what Hubble shows us. We start by applying a DNS rule to see which connections the NFs are trying to make, then apply a CiliumNetworkPolicy to whitelist that flow. We keep repeating the same loop (check Hubble, define a CNP, repeat) until we see a successful UE attach.

Preparing the environment

I recently completed the README of my HA K3S project, which uses Terraform and Ansible, but overall the project is still a work in progress. I still have a lot of shell commands that I need to convert into modules, and later on I want to clean up the scripts with roles. You can check the GitHub project here, or simply follow along below.

You can skip this part if you already have a working k8s cluster, but I’ve also noted some points here that you might find helpful if you host your cluster at home on VMs.

First, we spin up the VMs on Proxmox with Terraform. Each VM gets 4 vCPUs and 4 GB of RAM, with the CPU type set to host so that the CPU feature set required by the latest version of MongoDB is passed through to the guest. Each VM also has a 50 GB disk and a single vNIC, with the IP address and default gateway configured automatically by cloud-init.
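Why the host CPU type matters: MongoDB 5.0 and later need a CPU with AVX, and the generic emulated CPU types that Proxmox can assign may not expose it to the guest. To confirm that the flag actually reached the VM, you can inspect /proc/cpuinfo from inside it. Below is a small Python sketch for that. It assumes a Linux x86_64 guest, and the helper names are just for illustration.

```python
"""Check whether the (virtual) CPU exposes AVX, which MongoDB 5.0+ needs.

Assumes a Linux x86_64 guest whose /proc/cpuinfo contains "flags" lines.
"""
from pathlib import Path


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the union of all CPU flags listed in a /proc/cpuinfo dump."""
    flags: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Only the plain "flags" key; e.g. "vmx flags" is a different line
        if sep and key.strip() == "flags":
            flags.update(value.split())
    return flags


def has_avx(cpuinfo_text: str) -> bool:
    """True if the 'avx' flag is present in the dump."""
    return "avx" in cpu_flags(cpuinfo_text)


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    if not path.exists():
        print("no /proc/cpuinfo here; run this inside the Linux VM")
    elif has_avx(path.read_text()):
        print("AVX is visible to this VM")
    else:
        print("no AVX: check that the Proxmox CPU type is set to 'host'")
```

Run it inside each VM after Terraform has created them. If AVX is missing, recent MongoDB images will typically fail to start.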

Next, we run a preflight script that installs some packages and performs a few other steps to prepare the environment for Ansible.

Then we run ansible-playbook -i inventory.yaml k3s-kubevip-helm-ciliumInstallHelmCli.yaml to install K3S, kube-vip, Helm, and Cilium. If you use the same playbook from the k3s-ha repository, note that Cilium’s kube-proxy replacement doesn’t support SCTP yet. I wrote the playbook for general K3S use, so it enables kube-proxy replacement; for this setup you have to remove or comment out that setting in the playbook.

Cilium, Hubble, and Open5gs

Before we start deploying the open5gs Helm charts, let’s install Hubble as a prerequisite. You can install the Cilium CLI and the Hubble CLI on the management host where you normally run your kubectl commands. Note that by default Hubble only shows L3/L4 flow information. To see L7 details such as DNS queries and HTTP requests, we can either annotate the pods or enforce a CiliumNetworkPolicy with L7 rules. We will go with the latter.

Apply the following CiliumNetworkPolicy, l4-egress-to-dns.yaml:

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "l4-egress-to-dns"
spec:
  endpointSelector:
    matchLabels:
      app.kubernetes.io/instance: open5gs
  egress:
    - toPorts:
        - ports:
            - port: "53"
              protocol: ANY
          rules:
            dns:
              - matchPattern: "*"
```

Here we allow DNS traffic on port 53 so the pods can resolve the FQDNs of the services used over the SBI. For now we won’t configure the CNP (CiliumNetworkPolicy) for any other port. Note that the DNS rule is applied on the egress of every labeled pod. Once a pod has resolved a name, Cilium will drop its subsequent connection attempts, because no other egress traffic is allowed yet.

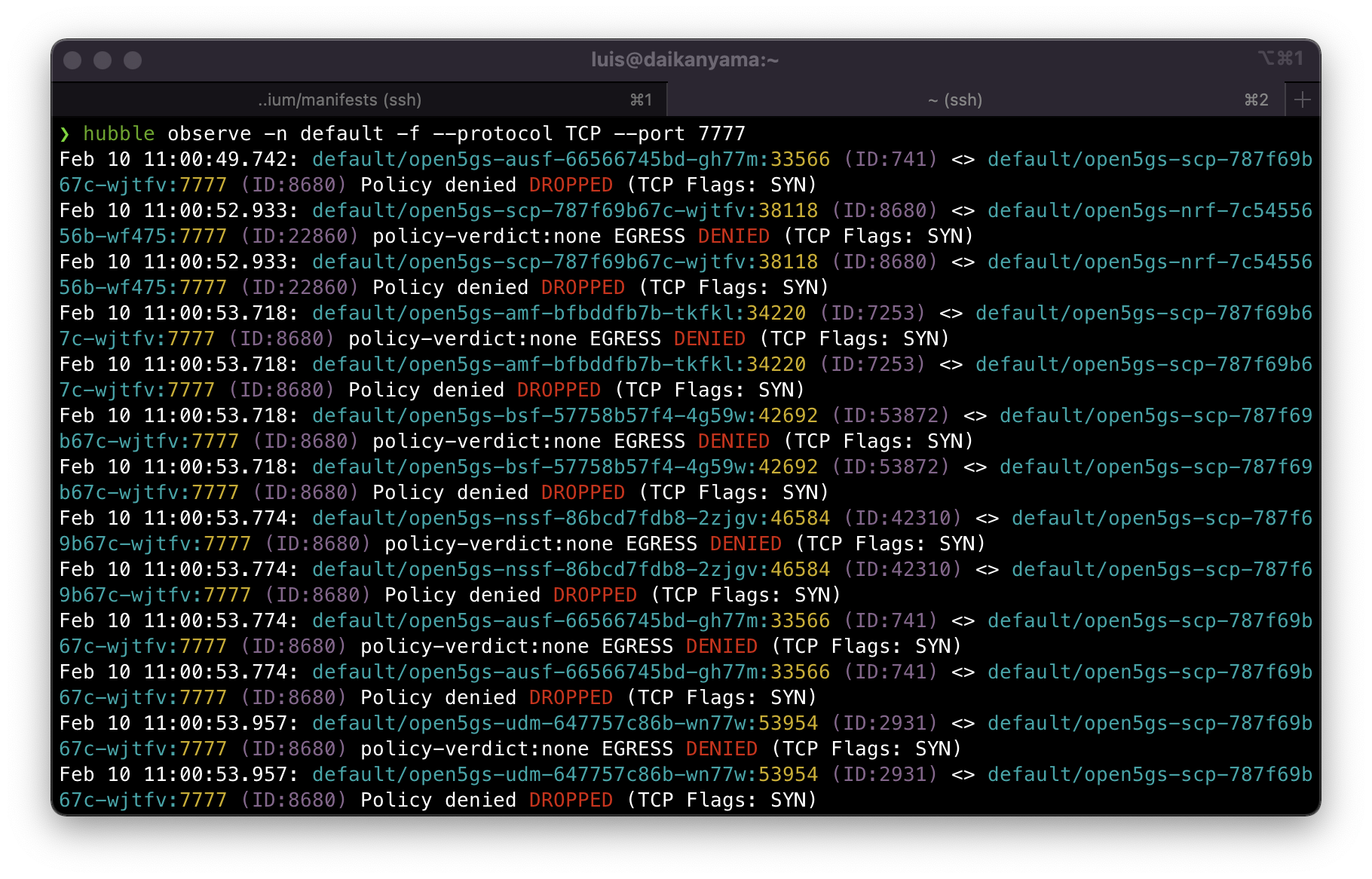

In another terminal window, let’s run Hubble to monitor the TCP connections that the NFs will open to send the HTTP POST messages for NRF registration:

```shell
cilium hubble port-forward &
hubble observe -n default -f --protocol TCP --port 7777
```

With that in place, let’s deploy our 5G core network:

```shell
helm install open5gs oci://registry-1.docker.io/gradiant/open5gs --version 2.2.0 --values https://gradiant.github.io/5g-charts/docs/open5gs-ueransim-gnb/5gSA-values.yaml
```

Soon enough, Hubble should start showing TCP flows with the verdict DROPPED, since we have only allowed DNS up to this point. We should see all the core components trying to connect to the SCP on port 7777, and the SCP trying to connect to the NRF on the same port.

Let’s now create a new L7 CNP that allows this communication. Again, we configure it on the egress side. CiliumNetworkPolicies are stateful, but that only means the return path of an allowed connection is permitted automatically. We still have to make sure the forward path is allowed.
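To make the stateful behaviour concrete, here is a toy Python model of connection tracking. It is not how Cilium implements conntrack (that lives in eBPF maps in the datapath). It only illustrates the rule: a policy must allow the forward packet that opens a connection, and after that, replies on the same connection are accepted without any extra rule. The pod names and the ephemeral ports are made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Packet:
    src: str
    sport: int
    dst: str
    dport: int
    proto: str = "TCP"


@dataclass
class ToyConntrack:
    """Toy stateful filter: policy is consulted only for packets that are not replies."""

    # (src, dst, dport, proto) forward flows that some egress policy allows
    allowed_forward: set
    # 5-tuples of connections opened through an allowed forward path
    established: set = field(default_factory=set)

    def verdict(self, p: Packet) -> str:
        reply_of = (p.dst, p.dport, p.src, p.sport, p.proto)
        if reply_of in self.established:
            return "FORWARDED"  # return path of a tracked connection
        if (p.src, p.dst, p.dport, p.proto) in self.allowed_forward:
            self.established.add((p.src, p.sport, p.dst, p.dport, p.proto))
            return "FORWARDED"  # forward path explicitly allowed by policy
        return "DROPPED"  # new connection that no policy allows


if __name__ == "__main__":
    # Only AMF -> SCP:7777 is allowed, mimicking an egress-to-SCP rule
    ct = ToyConntrack(allowed_forward={("amf", "scp", 7777, "TCP")})
    print(ct.verdict(Packet("amf", 40000, "scp", 7777)))  # FORWARDED (policy)
    print(ct.verdict(Packet("scp", 7777, "amf", 40000)))  # FORWARDED (reply)
    print(ct.verdict(Packet("scp", 50000, "amf", 7777)))  # DROPPED (new flow)
```

The last packet is the interesting one. When the SCP opens its own connection towards an NF, that is a new forward path, so it needs a rule of its own.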

Before writing that CNP, though, let’s define a label for each core component that is expected to have an SBI, so that we can match on it in our network policies. Let’s patch each of those components with the label sbi: enabled:

```shell
kubectl patch deployment open5gs-amf --patch '{"spec": {"template": {"metadata": {"labels": {"sbi": "enabled"}}}}}'
kubectl patch deployment open5gs-ausf --patch '{"spec": {"template": {"metadata": {"labels": {"sbi": "enabled"}}}}}'
kubectl patch deployment open5gs-bsf --patch '{"spec": {"template": {"metadata": {"labels": {"sbi": "enabled"}}}}}'
kubectl patch deployment open5gs-nrf --patch '{"spec": {"template": {"metadata": {"labels": {"sbi": "enabled"}}}}}'
kubectl patch deployment open5gs-nssf --patch '{"spec": {"template": {"metadata": {"labels": {"sbi": "enabled"}}}}}'
kubectl patch deployment open5gs-pcf --patch '{"spec": {"template": {"metadata": {"labels": {"sbi": "enabled"}}}}}'
kubectl patch deployment open5gs-scp --patch '{"spec": {"template": {"metadata": {"labels": {"sbi": "enabled"}}}}}'
kubectl patch deployment open5gs-smf --patch '{"spec": {"template": {"metadata": {"labels": {"sbi": "enabled"}}}}}'
kubectl patch deployment open5gs-udm --patch '{"spec": {"template": {"metadata": {"labels": {"sbi": "enabled"}}}}}'
kubectl patch deployment open5gs-udr --patch '{"spec": {"template": {"metadata": {"labels": {"sbi": "enabled"}}}}}'
kubectl patch deployment open5gs-upf --patch '{"spec": {"template": {"metadata": {"labels": {"sbi": "enabled"}}}}}'
```

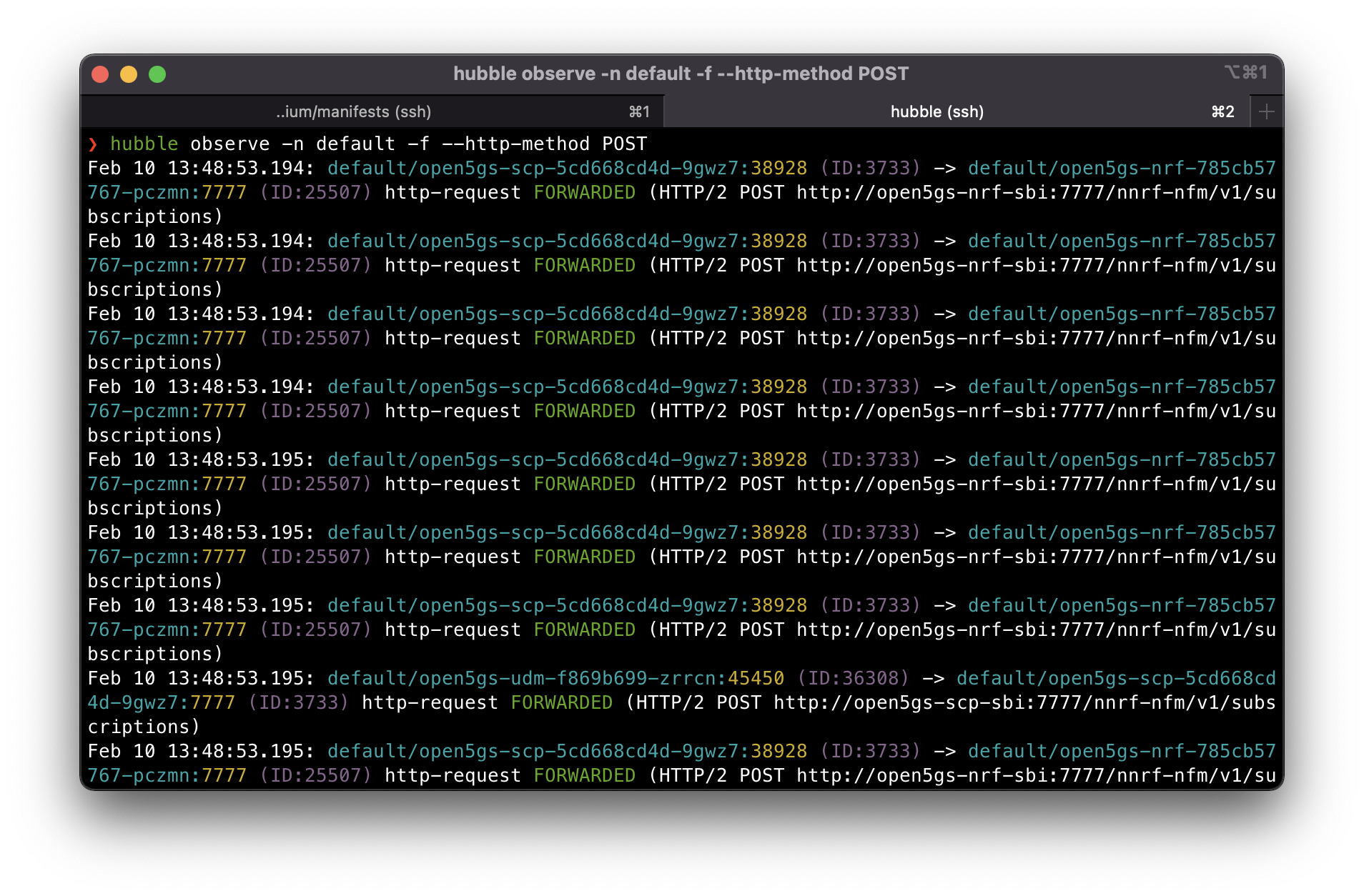

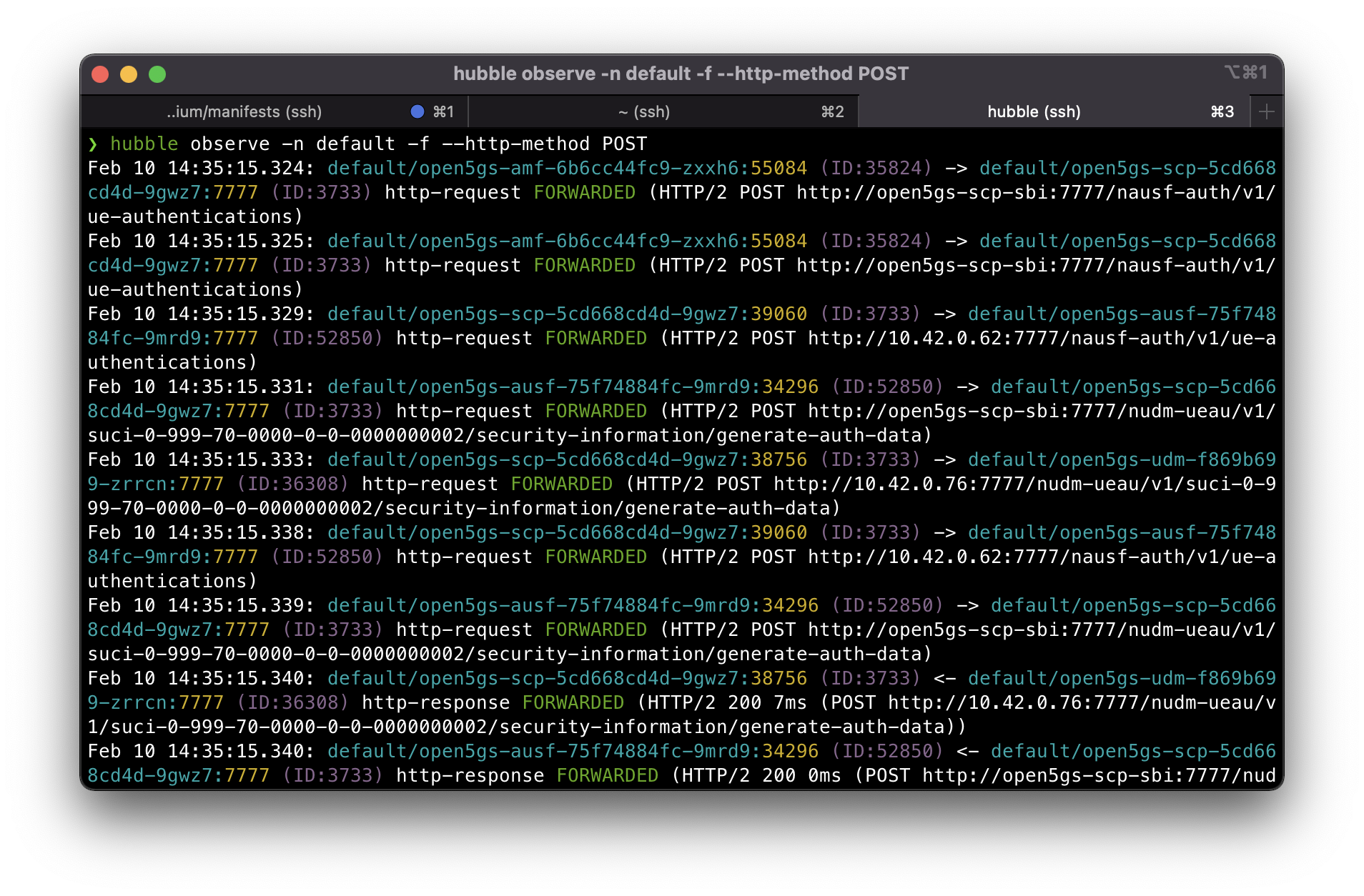
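The eleven patch commands differ only in the deployment name, so they can also be generated in a loop. Here is a minimal Python sketch. It assumes kubectl is on the PATH, pointed at the cluster, and that the deployments follow the open5gs-&lt;nf&gt; naming from the Helm release; the release parameter and helper name are hypothetical. It builds the same strategic-merge patch body as the commands above, and only calls kubectl when run with --apply. Changing the pod template triggers a rolling restart of each deployment, so the label appears on the new pods.

```python
import json
import shlex
import subprocess
import sys

# Deployments patched with sbi=enabled in the commands above
NFS = ["amf", "ausf", "bsf", "nrf", "nssf", "pcf", "scp", "smf", "udm", "udr", "upf"]

# Same strategic-merge patch body: add sbi=enabled to the pod template labels
PATCH = {"spec": {"template": {"metadata": {"labels": {"sbi": "enabled"}}}}}


def patch_commands(release: str = "open5gs") -> list:
    """Build one `kubectl patch deployment` argv per NF; nothing is executed here."""
    body = json.dumps(PATCH)
    return [
        ["kubectl", "patch", "deployment", f"{release}-{nf}", "--patch", body]
        for nf in NFS
    ]


if __name__ == "__main__":
    apply = "--apply" in sys.argv[1:]
    for argv in patch_commands():
        if apply:
            subprocess.run(argv, check=True)  # needs kubectl and a working kubeconfig
        else:
            print(shlex.join(argv))  # dry run: print copy-pasteable commands
```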

This time, let’s monitor HTTP POST requests with:

```shell
hubble observe -n default -f --http-method POST
```

Apply the following CNPs, l7-egress-to-scp.yaml and l7-egress-scp-to-core.yaml, which make use of the label we just defined:

l7-egress-to-scp.yaml:

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "l7-egress-to-scp"
spec:
  endpointSelector:
    matchLabels:
      sbi: enabled
  egress:
    - toEndpoints:
        - matchLabels:
            app.kubernetes.io/name: scp
      toPorts:
        # For SBI communication
        - ports:
            - port: "7777"
              protocol: TCP
          rules:
            http: [{}]
```

l7-egress-scp-to-core.yaml:

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "l7-egress-scp-to-core"
spec:
  endpointSelector:
    matchLabels:
      app.kubernetes.io/name: scp
  egress:
    - toEndpoints:
        - matchLabels:
            sbi: enabled
      toPorts:
        # For SBI communication
        - ports:
            - port: "7777"
              protocol: TCP
          rules:
            http: [{}]
```

Let’s also configure another CNP to allow ingress HTTP traffic only if it’s coming from the SCP or from another pod labeled sbi: enabled.

l7-ingress-from-scp.yaml:

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "l7-ingress-from-scp"
spec:
  endpointSelector:
    matchLabels:
      sbi: enabled
  ingress:
    - fromEndpoints:
        - matchLabels:
            app.kubernetes.io/name: scp
        - matchLabels:
            sbi: enabled
      toPorts:
        # For SBI communication
        - ports:
            - port: "7777"
              protocol: TCP
          rules:
            http: [{}]
```
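It helps to spell out how Cilium combines selectors, because this determines what the ingress policy actually admits. All labels inside one matchLabels must match (AND), while the entries of the fromEndpoints list are alternatives (OR). Since the SCP itself carries sbi: enabled, the second entry already covers the first, and it also admits any other SBI-labeled NF. The toy Python model below shows the effect. The helper names are hypothetical, and it ignores namespaces and the security-identity machinery Cilium really uses.

```python
Labels = dict[str, str]


def matches(selector: Labels, pod_labels: Labels) -> bool:
    """matchLabels semantics: every key/value in the selector must be present (AND)."""
    return all(pod_labels.get(k) == v for k, v in selector.items())


def admitted(from_endpoints: list[Labels], pod_labels: Labels) -> bool:
    """fromEndpoints semantics: any one selector in the list is enough (OR)."""
    return any(matches(sel, pod_labels) for sel in from_endpoints)


# The two fromEndpoints entries of l7-ingress-from-scp
FROM_ENDPOINTS = [
    {"app.kubernetes.io/name": "scp"},
    {"sbi": "enabled"},
]

# Illustrative label sets for three pods after the sbi patch
scp = {"app.kubernetes.io/name": "scp", "sbi": "enabled"}
amf = {"app.kubernetes.io/name": "amf", "sbi": "enabled"}
gnb = {"app.kubernetes.io/name": "ueransim-gnb"}

if __name__ == "__main__":
    for name, labels in [("scp", scp), ("amf", amf), ("gnb", gnb)]:
        print(name, admitted(FROM_ENDPOINTS, labels))
    # scp True, amf True (via sbi=enabled), gnb False
```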

We will also allow the UDR, PCF, and WebUI, as well as the populate component, to reach MongoDB on port 27017.

l4-egress-populate-webui-udr-pcf-to-mongodb.yaml:

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "l4-egress-populate-to-mongodb"
spec:
  endpointSelector:
    matchLabels:
      app.kubernetes.io/component: populate
  egress:
    - toEndpoints:
        - matchLabels:
            app.kubernetes.io/name: mongodb
      toPorts:
        # For mongodb access
        - ports:
            - port: "27017"
              protocol: TCP
---
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "l4-egress-webui-to-mongodb"
spec:
  endpointSelector:
    matchLabels:
      app.kubernetes.io/name: webui
  egress:
    - toEndpoints:
        - matchLabels:
            app.kubernetes.io/name: mongodb
      toPorts:
        # For mongodb access
        - ports:
            - port: "27017"
              protocol: TCP
---
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "l4-egress-udr-to-mongodb"
spec:
  endpointSelector:
    matchLabels:
      app.kubernetes.io/name: udr
  egress:
    - toEndpoints:
        - matchLabels:
            app.kubernetes.io/name: mongodb
      toPorts:
        # For mongodb access
        - ports:
            - port: "27017"
              protocol: TCP
---
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "l4-egress-pcf-to-mongodb"
spec:
  endpointSelector:
    matchLabels:
      app.kubernetes.io/name: pcf
  egress:
    - toEndpoints:
        - matchLabels:
            app.kubernetes.io/name: mongodb
      toPorts:
        # For mongodb access
        - ports:
            - port: "27017"
              protocol: TCP
```
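The four MongoDB policies differ only in their name and endpoint selector, so they are easy to generate. A Python sketch, assuming you are happy to feed kubectl JSON instead of YAML (kubectl apply -f accepts both). It wraps the policies in a v1 List so that a single file holds all of them. The selectors are copied from the YAML above, while the script and output file names are hypothetical.

```python
import json

# (policy name, selector label key, selector label value), copied from the YAML above
CLIENTS = [
    ("l4-egress-populate-to-mongodb", "app.kubernetes.io/component", "populate"),
    ("l4-egress-webui-to-mongodb", "app.kubernetes.io/name", "webui"),
    ("l4-egress-udr-to-mongodb", "app.kubernetes.io/name", "udr"),
    ("l4-egress-pcf-to-mongodb", "app.kubernetes.io/name", "pcf"),
]


def mongodb_egress_policy(name: str, key: str, value: str) -> dict:
    """One L4 CiliumNetworkPolicy allowing egress to MongoDB on TCP/27017."""
    return {
        "apiVersion": "cilium.io/v2",
        "kind": "CiliumNetworkPolicy",
        "metadata": {"name": name},
        "spec": {
            "endpointSelector": {"matchLabels": {key: value}},
            "egress": [{
                "toEndpoints": [{"matchLabels": {"app.kubernetes.io/name": "mongodb"}}],
                "toPorts": [{"ports": [{"port": "27017", "protocol": "TCP"}]}],
            }],
        },
    }


def policy_list() -> dict:
    """Wrap all policies in a v1 List so one `kubectl apply -f` handles them."""
    return {
        "apiVersion": "v1",
        "kind": "List",
        "items": [mongodb_egress_policy(*c) for c in CLIENTS],
    }


if __name__ == "__main__":
    # e.g. python gen_mongodb_cnp.py > mongodb-policies.json
    #      kubectl apply -f mongodb-policies.json
    print(json.dumps(policy_list(), indent=2))
```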

```shell
kubectl apply -f l7-egress-to-scp.yaml -f l7-ingress-from-scp.yaml -f l7-egress-scp-to-core.yaml -f l4-egress-populate-webui-udr-pcf-to-mongodb.yaml
```

The HTTP/2 messages look fine. Here we can see each application performing NF registration with the NRF via the SCP (acting as a proxy).

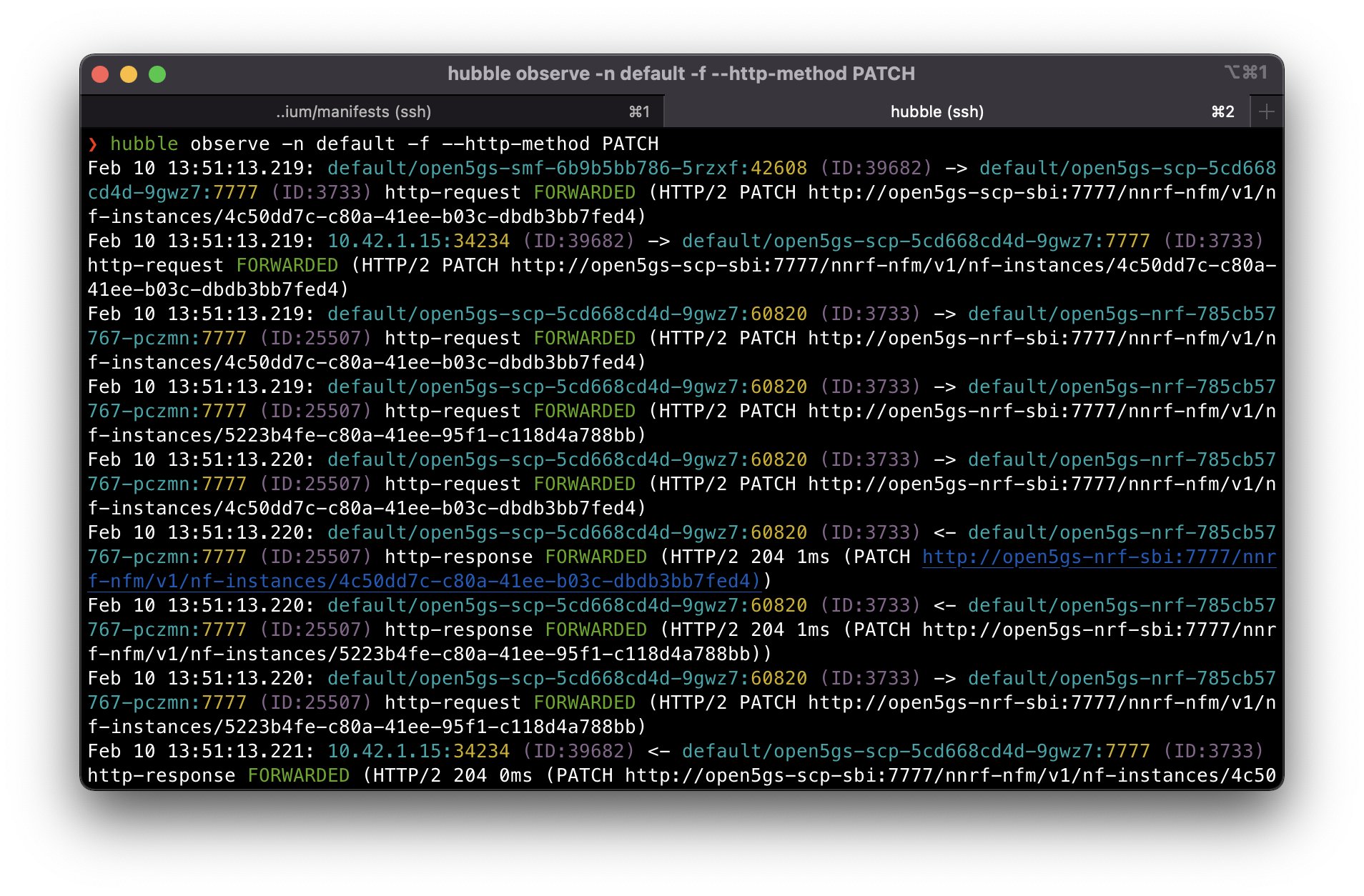

We can also check the heartbeat messages by changing the HTTP method filter to PATCH.

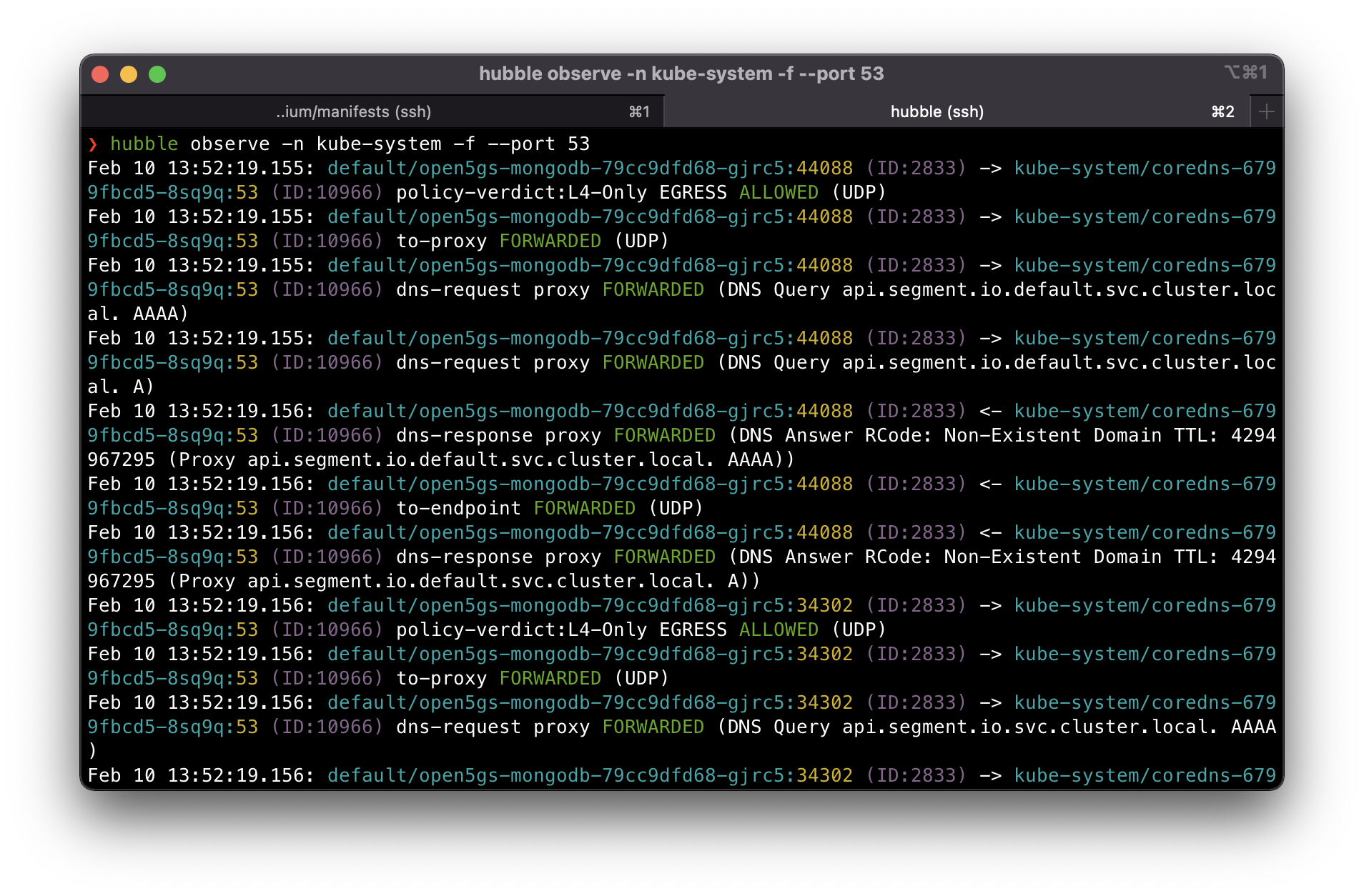

If you also want to see the DNS flows, you can run hubble observe -n kube-system -f --port 53.

Next we deploy the UERANSIM GNB and UE pods.

```shell
helm install ueransim-gnb oci://registry-1.docker.io/gradiant/ueransim-gnb --version 0.2.6 --values https://gradiant.github.io/5g-charts/docs/open5gs-ueransim-gnb/gnb-ues-values.yaml
```

If you follow the instructions, you should expect a new tunnel interface inside the UE pod. Most likely you won’t get one, though, and if you check the gNB logs you will see the error “Cell selection failure, no suitable or acceptable cell found”.

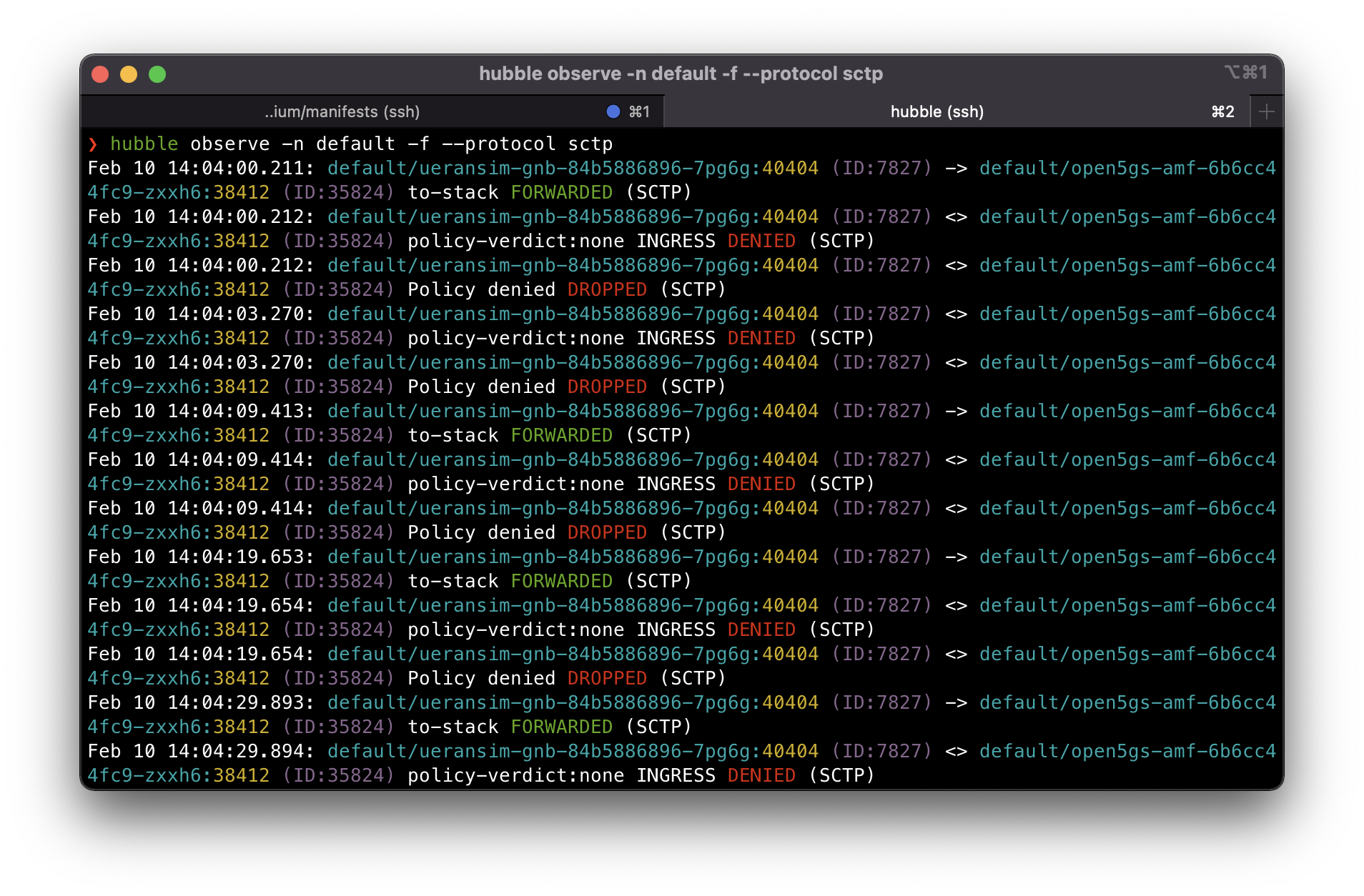

First, let’s check whether the gNB can connect to the AMF. The gNB connects over SCTP, so we can filter for that with Hubble:

```shell
hubble observe -n default -f --protocol sctp
```

It’s getting dropped because of the L7 ingress rule we applied, which also selects the AMF through its sbi: enabled label. You might wonder why an L7 rule would drop SCTP, which sits at L4. Network policies work as a whitelist. The moment a policy selects an endpoint in a given direction (ingress or egress), everything in that direction that no policy explicitly allows gets dropped.

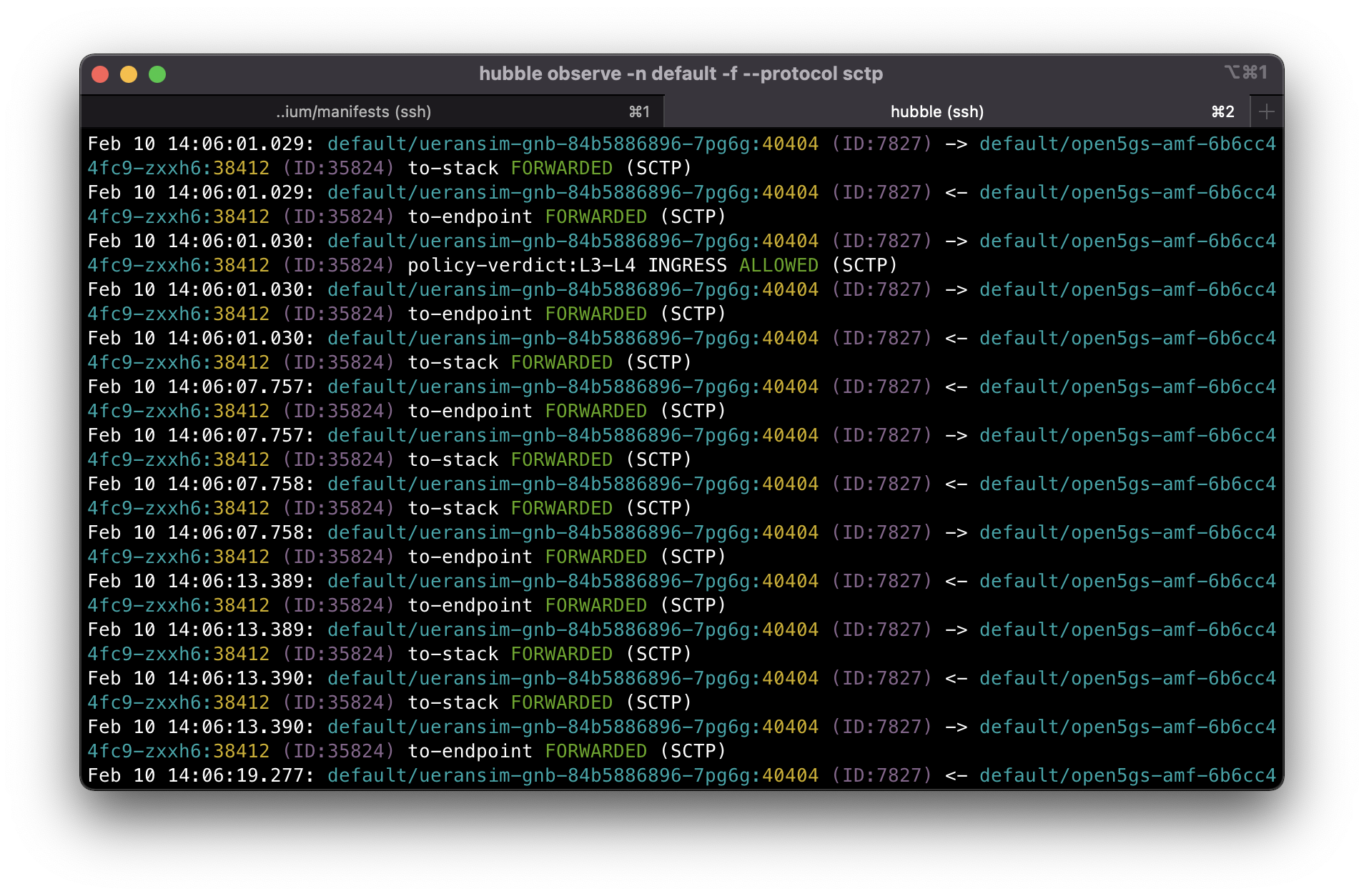
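The whitelist behaviour fits in a few lines. This is a toy Python model, not Cilium’s implementation, and it only looks at ingress to a single endpoint. If no policy with ingress rules selects the endpoint, everything is allowed. As soon as one does, only traffic matching some rule gets through, whatever layer that rule was written at. Peer selectors and HTTP matching are deliberately left out, and the helper names are hypothetical.

```python
from typing import NamedTuple


class Rule(NamedTuple):
    port: int
    protocol: str  # "TCP", "UDP", "SCTP", ...


def ingress_verdict(selected: bool, rules: list, port: int, protocol: str) -> str:
    """Toy allowlist verdict for traffic arriving at one endpoint.

    selected: whether any policy with ingress rules selects the endpoint.
    rules:    the union of those policies' port rules (an L7 rule counts as
              allowing its port; its HTTP matching is not modelled).
    """
    if not selected:
        return "FORWARDED"  # not isolated: default allow
    if any(r.port == port and r.protocol == protocol for r in rules):
        return "FORWARDED"
    return "DROPPED"  # isolated: anything not whitelisted is denied


# l7-ingress-from-scp selects the AMF (sbi=enabled) and only opens TCP/7777
SBI_ONLY = [Rule(7777, "TCP")]
# The l4-ingress-amf-from-gnb-sctp.yaml policy adds NGAP on SCTP/38412
WITH_NGAP = SBI_ONLY + [Rule(38412, "SCTP")]

if __name__ == "__main__":
    print(ingress_verdict(False, [], 38412, "SCTP"))        # FORWARDED
    print(ingress_verdict(True, SBI_ONLY, 38412, "SCTP"))   # DROPPED
    print(ingress_verdict(True, WITH_NGAP, 38412, "SCTP"))  # FORWARDED
```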

Let’s go apply the SCTP rule!

l4-ingress-amf-from-gnb-sctp.yaml:

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "l4-ingress-amf-from-gnb-sctp"
  namespace: default
spec:
  endpointSelector:
    matchLabels:
      app.kubernetes.io/name: amf
  ingress:
    - fromEndpoints:
        - matchLabels:
            app.kubernetes.io/name: ueransim-gnb
      toPorts:
        - ports:
            - port: "38412"
              protocol: SCTP
```

OK, the SCTP association looks established now.

We can also see successful UE registration:

But still no tunneled interface. Let’s check for other dropped packets:

```shell
hubble observe -n default -f --verdict DROPPED
```
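When several things are being dropped at once, a tally can be easier to read than a scrolling terminal. hubble observe can print one JSON object per flow with -o json. The Python sketch below reads such a dump and counts drops per (source pod, destination pod, destination port). The field names it relies on (flow.verdict, flow.source.pod_name, flow.destination.pod_name, and flow.l4.&lt;protocol&gt;.destination_port) are an assumption based on Hubble’s flow schema, so check them against the output of your Hubble version. The script and file names are hypothetical.

```python
import json
import sys
from collections import Counter


def drop_key(event: dict):
    """Return (src pod, dst pod, dst port) for a DROPPED flow, else None.

    Assumes the layout {"flow": {"verdict", "source", "destination", "l4"}}.
    """
    flow = event.get("flow", {})
    if flow.get("verdict") != "DROPPED":
        return None
    src = flow.get("source", {}).get("pod_name", "?")
    dst = flow.get("destination", {}).get("pod_name", "?")
    port = None
    # l4 holds a single protocol key such as "TCP", "UDP" or "SCTP"
    for proto in flow.get("l4", {}).values():
        port = proto.get("destination_port")
    return src, dst, port


def tally(lines) -> Counter:
    """Count DROPPED flows per (src, dst, port) from JSON lines."""
    counts: Counter = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue
        key = drop_key(json.loads(line))
        if key is not None:
            counts[key] += 1
    return counts


if __name__ == "__main__" and len(sys.argv) > 1:
    # hubble observe -n default --verdict DROPPED --last 1000 -o json > drops.json
    # python tally_drops.py drops.json
    with open(sys.argv[1]) as f:
        for (src, dst, port), n in tally(f).most_common():
            print(f"{n:5d}  {src} -> {dst}:{port}")
```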

What’s getting dropped this time? We forgot about the SMF-to-UPF communication. We should also allow the UPF to send traffic to any destination on any port, at least in the egress direction. Apart from this, the gNB must be allowed to communicate with the UPF for user traffic.

l4-egress-smf-to-upf.yaml:

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "l4-egress-smf-to-upf"
spec:
  endpointSelector:
    matchLabels:
      app.kubernetes.io/name: smf
  egress:
    - toEndpoints:
        - matchLabels:
            app.kubernetes.io/name: upf
      toPorts:
        # PFCP (N4) session signaling between SMF and UPF
        - ports:
            - port: "8805"
              protocol: UDP
```

l4-ingress-upf-from-smf.yaml:

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "l4-ingress-upf-from-smf"
spec:
  endpointSelector:
    matchLabels:
      app.kubernetes.io/name: upf
  ingress:
    - fromEndpoints:
        - matchLabels:
            app.kubernetes.io/name: smf
      toPorts:
        # PFCP (N4) session signaling between SMF and UPF
        - ports:
            - port: "8805"
              protocol: UDP
```

l4-ingress-upf-from-gnb.yaml:

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "l4-ingress-upf-from-gnb"
spec:
  endpointSelector:
    matchLabels:
      app.kubernetes.io/name: upf
  ingress:
    - fromEndpoints:
        - matchLabels:
            app.kubernetes.io/component: gnb
      toPorts:
        # GTP-U (N3) user-plane traffic from the gNB
        - ports:
            - port: "2152"
              protocol: UDP
```
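For reference, the non-SBI flows opened in this section map onto standard 3GPP reference points with well-known ports. N2 carries NGAP over SCTP on port 38412, N3 carries GTP-U over UDP on port 2152, and N4 carries PFCP over UDP on port 8805. The small Python lookup below encodes that mapping so that the policies can be cross-checked. The peer names are the roles used in this setup, and the helper name is hypothetical.

```python
from typing import NamedTuple


class Interface(NamedTuple):
    name: str       # 3GPP reference point
    protocol: str   # application protocol carried
    transport: str  # L4 protocol to put in the CNP
    port: int       # well-known destination port
    src: str
    dst: str


INTERFACES = [
    Interface("N2", "NGAP", "SCTP", 38412, "gnb", "amf"),
    Interface("N3", "GTP-U", "UDP", 2152, "gnb", "upf"),
    Interface("N4", "PFCP", "UDP", 8805, "smf", "upf"),
]


def required_port(src: str, dst: str) -> tuple[str, int]:
    """Return (transport, port) that a policy must allow for src -> dst traffic."""
    for i in INTERFACES:
        if (i.src, i.dst) == (src, dst):
            return i.transport, i.port
    raise KeyError(f"no non-SBI interface defined for {src} -> {dst}")


if __name__ == "__main__":
    for i in INTERFACES:
        print(f"{i.name}: {i.src} -> {i.dst}  {i.protocol} over {i.transport}/{i.port}")
```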

l3-egress-upf-to-any.yaml:

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "l3-egress-upf-to-any"
spec:
  endpointSelector:
    matchLabels:
      app.kubernetes.io/name: upf
  egress:
    - toCIDRSet:
        - cidr: 0.0.0.0/0
          # except private CIDR
          except:
            - 10.0.0.0/8
            - 172.16.0.0/12
            - 192.168.0.0/16
```

For the UPF we allow all IP addresses except for the private CIDR blocks.

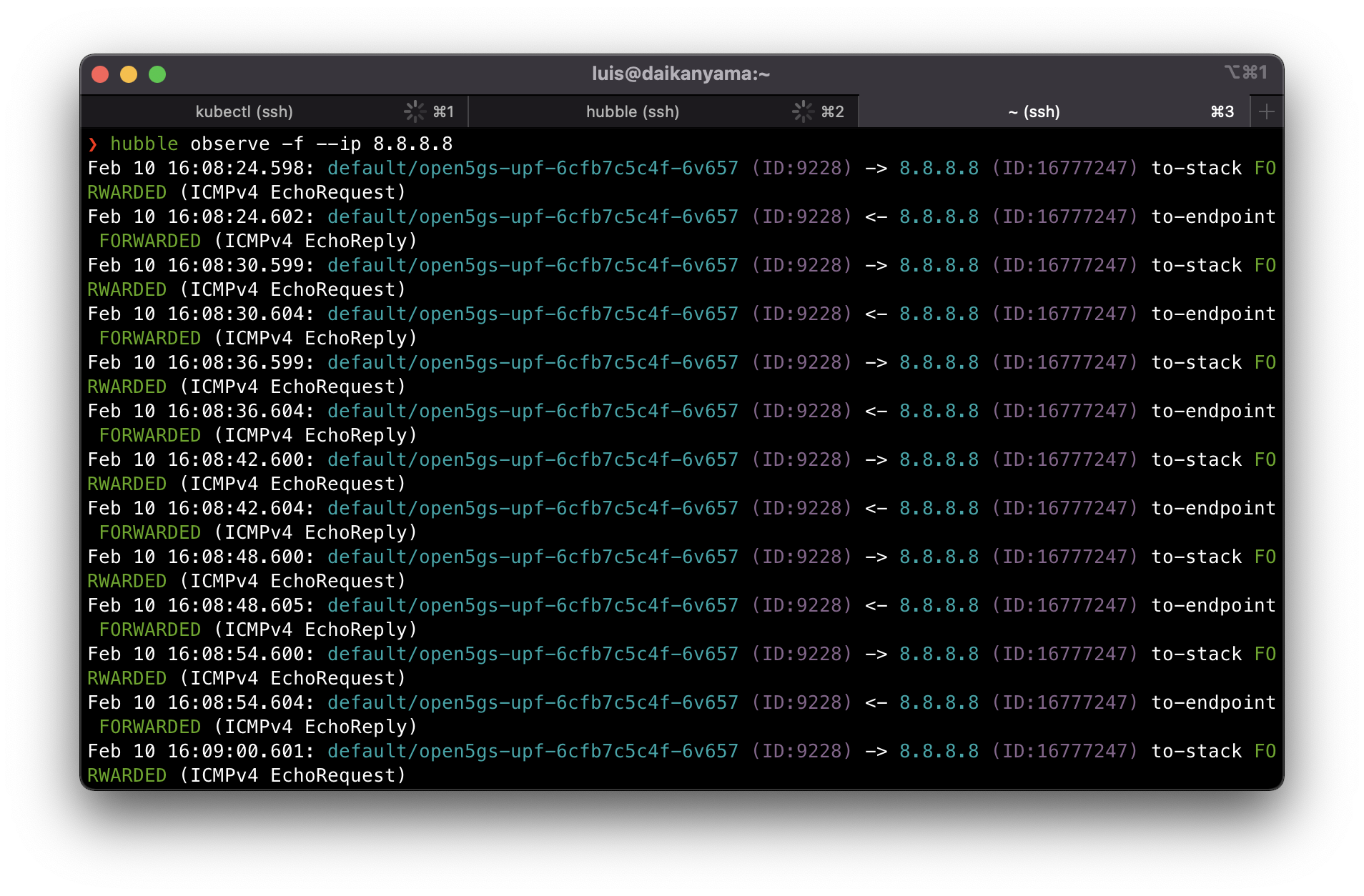
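To double-check what the toCIDRSet rule permits, Python’s standard ipaddress module can evaluate the same cidr/except logic. This sketch captures the rule’s intent only. It ignores Cilium-specific details such as how cluster-managed endpoints are handled, and the helper name is hypothetical.

```python
import ipaddress

# Mirrors l3-egress-upf-to-any: allow 0.0.0.0/0 except the RFC 1918 private ranges
ALLOW = ipaddress.ip_network("0.0.0.0/0")
EXCEPT = [
    ipaddress.ip_network(c) for c in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def upf_egress_allowed(dst: str) -> bool:
    """True if dst is inside the allowed CIDR and outside every excepted block."""
    ip = ipaddress.ip_address(dst)
    return ip in ALLOW and not any(ip in net for net in EXCEPT)


if __name__ == "__main__":
    for dst in ("8.8.8.8", "10.42.0.15", "172.20.1.1", "172.32.0.1", "192.168.1.10"):
        print(dst, upf_egress_allowed(dst))
```

Note that 172.16.0.0/12 spans 172.16.0.0 to 172.31.255.255, so an address like 172.32.0.1 is still treated as public.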

Let’s try one more time; it should succeed now. This time let’s monitor all traffic to and from 8.8.8.8 with hubble observe -f --ip 8.8.8.8. Then, from the UE pod, start a ping through the tunnel interface with ping -I uesimtun0 8.8.8.8.

For the last part, we try to attach an unprovisioned SUPI. This time we can filter on HTTP status code 404 to verify the rejection:

```shell
hubble observe -f -n default --http-status 404
```

Deploy UE pods with unknown SUPIs:

```shell
helm install -n default ueransim-ues-not-defined oci://registry-1.docker.io/gradiant/ueransim-ues \
  --set gnb.hostname=ueransim-gnb \
  --set count=2 \
  --set initialMSISDN="0000000003"
```

Here we can see the 404 responses propagating back through the core: the UDM receives one from the UDR, the AUSF from the UDM, and finally the AMF from the AUSF.

To sum up, here are all the CiliumNetworkPolicies we applied:

```
NAME
l3-egress-upf-to-any
l4-egress-pcf-to-mongodb
l4-egress-populate-to-mongodb
l4-egress-smf-to-upf
l4-egress-to-dns
l4-egress-udr-to-mongodb
l4-egress-webui-to-mongodb
l4-ingress-amf-from-gnb-sctp
l4-ingress-upf-from-gnb
l4-ingress-upf-from-smf
l7-egress-scp-to-core
l7-egress-to-scp
l7-ingress-from-scp
```

Cilium (with Hubble) is awesome

To conclude, we have demonstrated how network policies can add another layer of security to our network. We created new policies, applied them on the ingress or egress wherever necessary, and used L3, L4, and L7 rules to whitelist the traffic. At the same time, Hubble gave us a good look into the network layer, helping us understand how network policies work and see which packets are allowed, dropped, or rejected. We used filters on protocol, port, verdict, HTTP method, and HTTP status. This demonstration doesn’t cover Hubble’s full capabilities, and there are plenty of other filters you can use for observability. The good thing is that the project is under very active development, and the guys over at Isovalent seem to add cool new features with every release. I won’t be surprised if Cilium becomes the default CNI choice for new projects. Stay tuned for upcoming posts as we explore more Cilium features!