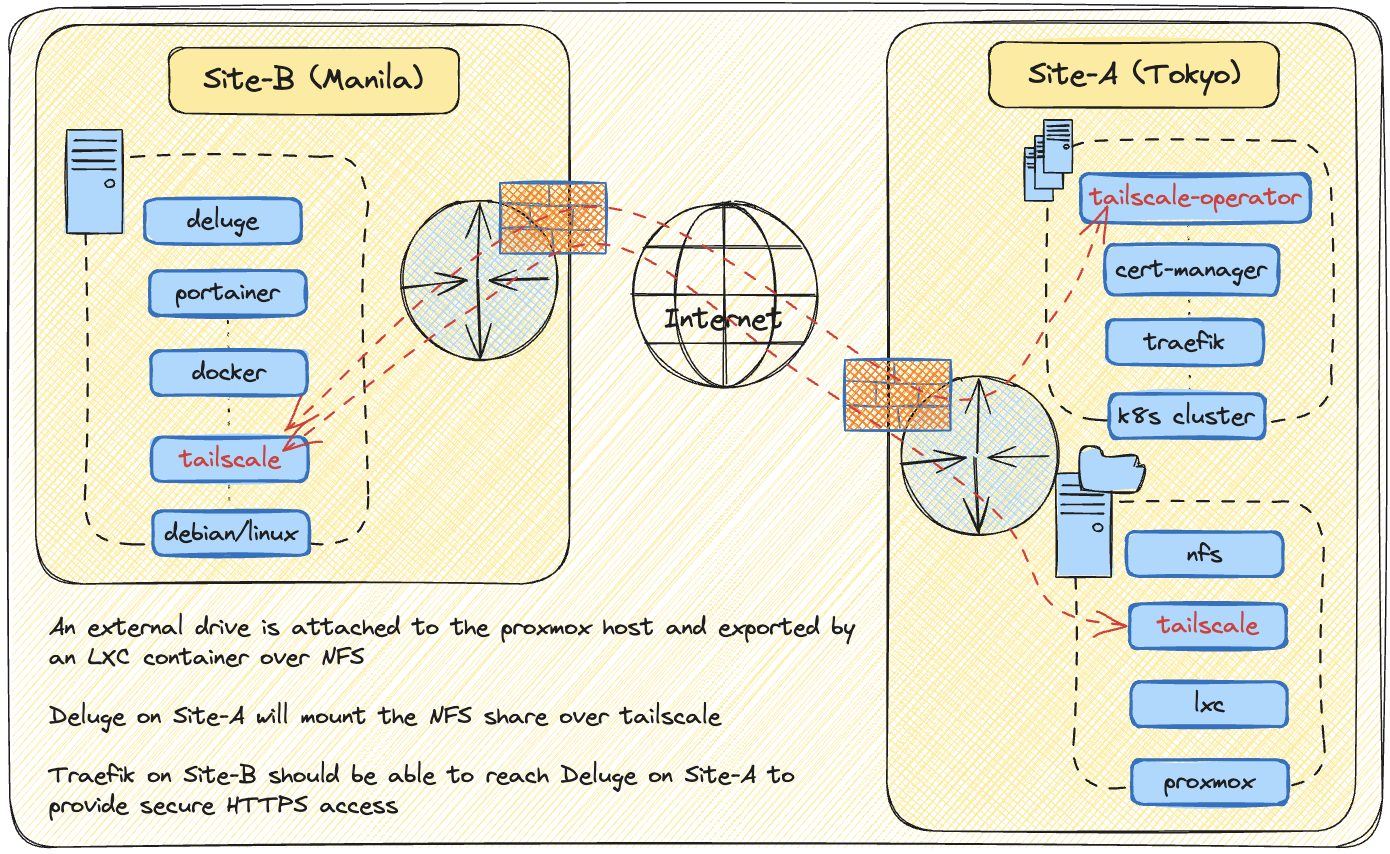

My homelab hasn’t seen any major changes in recent months for personal reasons, but now is a good time to get back on track. Today I want to share how I connected a completely remote server to my Kubernetes cluster, and how that server writes directly to my home NAS, with the help of Tailscale.

This might come in handy if you are thinking of connecting your own VPS to a K8s cluster, whether it’s hosted at home or in the cloud. It may also help if you simply want to connect two remote devices that sit on different networks behind firewalls.

First, some context: the NAS in my backend is exported via NFS by an LXC container hosted on Proxmox, and the remote service that mounts the share (Deluge in this case) runs as a Docker container.

Tailscale pre-requisites for LXC containers on PVE

On the NFS server end, the LXC container needs access to the host’s /dev/net/tun device. To allow this, two lines are needed in the container configuration (reference: Tailscale KB article).

```
lxc.mount.entry: /dev/net/tun dev/net/tun none bind,create=file
lxc.cgroup2.devices.allow: c 10:200 rwm
```

Stop the container and hop on to your PVE host’s terminal. Edit /etc/pve/lxc/1201.conf (1201 is my container ID; use your own), add the two lines above, and then start the container again.

Tailscale installation on the LXC container and remote server

Next up is installing Tailscale, which is fairly easy. The admin console can generate an install command that includes a pre-approved auth key: go to your Tailscale account > Machines > Add device > Generate install script. Make sure to create a reusable key, since the same command is run on two machines. There is no need to add tags if the device is intended for a single user. Run the command on both the LXC container and the remote server, e.g.:

```shell
curl -fsSL https://tailscale.com/install.sh | sh && sudo tailscale up --auth-key=tskey-auth-XXXXXXXXXXXX
```

Your devices should now show up under the Machines tab, together with their Tailscale IPs. To check the status of the Tailscale daemon:

```shell
sudo systemctl status tailscaled
```

To avoid manual intervention in the future, you can disable the key expiry for each device in the Machines tab.

Configure NFS

On the LXC container, allow the remote server’s Tailscale IP in /etc/exports:

```
/mnt/nfs-share 100.22.112.36/32(rw,sync,no_subtree_check,all_squash,anonuid=1000,anongid=1000,insecure)
```

Then apply the change with sudo exportfs -ra.

On the remote server, make sure the containers are stopped, then modify your compose file to mount the NFS share at /downloads:

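```yaml
version: "2.1"
services:
  deluge:
    image: lscr.io/linuxserver/deluge:latest
    container_name: deluge
    environment:
      - PUID=1000 # matches anonuid in /etc/exports on the NFS server
      - PGID=1000 # matches anongid
      - TZ=Asia/Manila
      - DELUGE_LOGLEVEL=error
    volumes:
      - .:/config
      - nfsdir:/downloads # NFS-backed named volume defined below
    ports:
      - 8112:8112 # web UI
      - 6881:6881 # incoming BitTorrent connections (TCP)
      - 6881:6881/udp # incoming BitTorrent connections (UDP)
    restart: unless-stopped

volumes:
  nfsdir:
    driver: local
    driver_opts:
      type: nfs
      # addr: Tailscale IP of the NFS server (the LXC container)
      o: addr=100.22.112.36,rw,vers=4.1
      device: ":/mnt/nfs-share"
```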

Start the container and try to create a file in the NFS share.

Install tailscale-operator on k8s

It’s easier to follow the official documentation, so I am sharing the actual KB article here.

In summary, two things need to be configured on the Tailscale management page: tags and OAuth client credentials. Complete those steps, then carry on with the Helm installation, which is also shown below.

Installation with helm

Replace the OAuth client ID and client secret placeholders:

```shell
helm repo add tailscale https://pkgs.tailscale.com/helmcharts
helm repo update

helm upgrade \
  --install \
  tailscale-operator \
  tailscale/tailscale-operator \
  --namespace=tailscale \
  --create-namespace \
  --set-string oauth.clientId="<OAuth client ID>" \
  --set-string oauth.clientSecret="<OAuth client secret>" \
  --wait
```

Create a ProxyGroup of type egress.

```yaml
apiVersion: tailscale.com/v1alpha1
kind: ProxyGroup
metadata:
  name: ts-proxies
spec:
  type: egress
  replicas: 3
```

Installation with ArgoCD

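If you are tidy, keep your infrastructure as code, and use ArgoCD, then dump the chart’s default values into a values.yaml file and update the clientId and clientSecret fields. I keep this file at tailscale/values.yaml in my repo, which is the path the Application manifest below references.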

```shell
helm show values tailscale/tailscale-operator > values.yaml
```

```yaml
# ...
oauth:
  clientId: "<OAuth client ID>"
  clientSecret: "<OAuth client secret>"
# ...
```

Create the manifest for your ArgoCD Application (replace yourgithubuser and yourrepo):

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: tailscale
  namespace: argocd
spec:
  destination:
    namespace: tailscale
    server: https://kubernetes.default.svc
  project: default
  sources:
    - repoURL: 'https://pkgs.tailscale.com/helmcharts'
      chart: tailscale-operator
      targetRevision: 1.78.3 # This is the latest version as of the time of this post.
      helm:
        releaseName: tailscale-operator
        valueFiles:
          - $values/tailscale/values.yaml
    - repoURL: git@github.com:yourgithubuser/yourrepo.git
      targetRevision: HEAD
      ref: values
    - repoURL: git@github.com:yourgithubuser/yourrepo.git
      path: tailscale/manifests
      targetRevision: HEAD
  syncPolicy:
    automated:
      prune: true
    syncOptions:
      - CreateNamespace=true
```

Don’t forget to create the egress ProxyGroup manifest as well, e.g. under tailscale/manifests so that the third source in the Application syncs it!

Testing connectivity from a pod to the remote server

Exec into any pod and curl the other end. If you don’t have a test pod, you can deploy an Alpine image:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    run: alpine
  name: alpine
  namespace: alpine
spec:
  replicas: 1
  selector:
    matchLabels:
      run: alpine
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        run: alpine
    spec:
      containers:
        - image: alpine:latest
          command:
            - /bin/sh
            - "-c"
            - "apk --update add curl && sleep 1440m"
          imagePullPolicy: IfNotPresent
          name: alpine
```
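The Deployment above lives in an alpine namespace, which has to exist before the Deployment can be applied. A minimal Namespace manifest for it is sketched below; it only assumes the namespace name already used in the Deployment, and it could sit in the same file separated by a --- line:

```yaml
# Sketch: namespace for the throwaway alpine test pod
apiVersion: v1
kind: Namespace
metadata:
  name: alpine
```

Running kubectl create namespace alpine achieves the same thing imperatively. Also, instead of looking up the generated pod name, kubectl exec accepts a deployment reference such as deploy/alpine and runs the command in one of its pods.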

```shell
kubectl -n alpine exec -it alpine-9c7cb4b95-nx7b4 -- curl http://10.43.59.234:8112
```

Pod to tailscale communication

Now that I have a secondary network attached to my cluster, I can use my ingress controller, Traefik, to provide HTTPS access to devices on that network.

To do so, Traefik must be allowed to route to ExternalName services. Set providers.kubernetesIngress.allowExternalNameServices to true in Traefik’s values.yaml file:

```yaml
# ...
providers:
  kubernetesIngress:
    enabled: true
    allowExternalNameServices: true
# ...
```
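If you route with Traefik’s IngressRoute CRD rather than a standard Ingress, the equivalent switch sits under the CRD provider instead. A sketch, assuming the layout of the official Traefik Helm chart’s values.yaml:

```yaml
# ...
providers:
  kubernetesCRD:
    enabled: true
    # Lets IngressRoute objects target Services of type ExternalName
    allowExternalNameServices: true
# ...
```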

Create the Ingress resource as you normally would, and also create a Service of type ExternalName. You can point it at either the remote server’s Tailscale IP or its Tailscale FQDN.

In case of the latter:

```yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
    tailscale.com/tailnet-fqdn: "voyager.taile9a23.ts.net"
    tailscale.com/proxy-group: "ts-proxies"
  name: ts-egress-voyager
spec:
  externalName: voyager-deluge # any value - will be overwritten by the operator
  type: ExternalName
  ports:
    - port: 8112
      protocol: TCP
      name: web
```
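For the IP option, the operator documentation describes a tailscale.com/tailnet-ip annotation that takes the device’s Tailscale IP instead of its FQDN. Below is a sketch of the same Service in that form, assuming the same ts-proxies ProxyGroup; the Service name and the IP value are placeholders rather than values from my setup, so double-check the egress section of the operator KB for your operator version:

```yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
    # Placeholder: the remote server's Tailscale IP, as shown in the Machines tab
    tailscale.com/tailnet-ip: "<remote server Tailscale IP>"
    tailscale.com/proxy-group: "ts-proxies"
  name: ts-egress-voyager-ip # hypothetical name
spec:
  externalName: placeholder # any value, overwritten by the operator
  type: ExternalName
  ports:
    - port: 8112
      protocol: TCP
      name: web
```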

Note that the tailscale.com/proxy-group annotation has to reference the ProxyGroup created earlier. The operator also creates an additional ClusterIP service in the tailscale namespace and points the ExternalName service at it:

```
NAME                         TYPE           CLUSTER-IP      EXTERNAL-IP                                      PORT(S)    AGE
ts-egress-voyager            ExternalName   <none>          ts-ts-egress-voyager-mgmz2.tailscale.svc.local   8112/TCP   2s
ts-ts-egress-voyager-mgmz2   ClusterIP      10.43.231.143   <none>                                           8112/TCP   2s
```
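The Ingress itself is an ordinary Traefik Ingress whose backend is the ExternalName service. A minimal sketch follows. deluge.example.com and the deluge-tls certificate secret are hypothetical placeholders, and traefik is assumed to be the IngressClass name (the chart’s default). The Ingress also has to live in the same namespace as ts-egress-voyager, because an Ingress can only reference Services in its own namespace:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: deluge # hypothetical name
  annotations:
    # Serve this route on Traefik's HTTPS entrypoint (named websecure by default)
    traefik.ingress.kubernetes.io/router.entrypoints: websecure
spec:
  ingressClassName: traefik
  tls:
    - hosts:
        - deluge.example.com # hypothetical hostname
      secretName: deluge-tls # hypothetical TLS secret, e.g. issued by cert-manager
  rules:
    - host: deluge.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                # The ExternalName service created above
                name: ts-egress-voyager
                port:
                  number: 8112
```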

This is the final step and you should now be able to access Deluge on the remote server via HTTPS.

Tailscale Lovin'

What I love about Tailscale is how easy it is to set up, and how the folks over there continue to offer a free plan for personal use. I still remember how difficult it was to set up a VPN with friends 15-20 years ago just to play games like Counter-Strike or StarCraft (maybe I just wasn’t experienced enough yet? I don’t know!). With Tailscale today, it’s a total game changer. I also want to mention that Tailscale offers Mullvad VPN access for $5/month for 5 devices. I am not sure whether this is supported by the tailscale-operator, but at least all your other devices can use it (you can even install Tailscale on an Apple TV!). I will probably consider subscribing in the not-so-distant future if I don’t find another use case for the remote Deluge server.